Custom rolling authentication in .NET for fun! Part 1

2024-06-06

Recently, I've embarked on writing a series of blog posts to document my journey in building a system from the ground up. One of the key features of this system is a login panel, which requires robust authentication. Instead of relying on existing authentication providers, I decided to dive deep and understand how authentication truly works. My aim is to learn from the work of the giants rather than merely using their solutions.

I'm especially keen on exploring modern authentication methods like authenticator-based 2FA codes and passkeys. While I use these tools daily, I don't fully understand the technology behind them. I want to uncover how they operate and see if I can implement them myself, though that's probably a challenge for another day!

In this project, I'll start by creating a simple authentication package in .NET, beginning with the basics of username and password authentication. Next, I'll add role-based access to an API, and possibly whip up a quick front-end. Once the fundamentals are in place, I'll move on to experimenting with more advanced protocols.

As such, we shall start as many do, by creating an empty API project in .NET (As I'll be rolling most stuff), which I can just follow the typical file -> new project flow in rider/VS/Dotnet CLI, I won't walk you through this part, it's boring and there are more articles and resources on how to do it, than I can even imagine.

So, obviously now I have my API, I can start adding auth yes? Well, no, not really, I intend for this to be packaged as a NuGet package so that, should the need arise I can utilize it within any of my own projects should I decide once again that I don't want to use off the shelf solutions. So, of course the first thing to do is add a class library to my solution, once again, I'll the skip the how-to and assume you can find out how to do this from the menagerie of existing tutorials and guides on this thing we call the web.

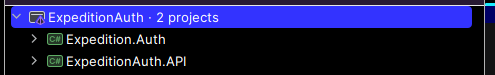

Now that all that is accomplished, we have a solution that looks like the following image.

You'll notice no doubt that I've come up with a name I find suitable to this journey, or expedition into the inner workings of authentication. Catchy, no?

Names aside, we have both a .NET web API, which for the purposes of this initial process will act as our testing ground for these features, as well as allow me the ability to ensure my class library can be "installed" into the API nice and easy.

So, what's my first step you might wonder, well I think building out a SQL table and everything I need for it is probably the best starting point, As I am me, I shall eschew a "proper ORM" like EF, or dapper for this, mostly because I'm absolutely addicted to source code generators and how they move us away from reflection based code (Reflection, you are lovely, but it's time to move on; sorry, not sorry?), any who, I after looking through a few potential choices for a "source code generated" ORM, I settled on one called MapDataReader which is open source and looks like it'll do what I need. Now that obviously leaves me in the quandary of "what about migrations" and "generated queries"? Well, sod them! I'll just write SQL. (which I might add is probably one of the most valuable languages you can learn, it's pretty much used EVERYWHERE in the software dev/engineering universe, whether you like it or not)

Now this is a whole lot of non-authentication based text right here, so I'm just going to install the NuGet package and move on to actually sharing my findings and research and then trying to implement it in a logical and secure fashion.

So, first of all, we've got username and password authentication, I'm going to keep the main focus on this for the moment, we've all been there and done it, we've had to enter a username, be it some random name we get to come up with, or our email address in lieu of letting us make a random username, but a username, nonetheless! alongside some password that most likely had to match all sorts of what feels like random criteria like having symbols and numbers and different cased letters, and whatever other things people think will help, and sure they help, but why do they help? What is the point of having a password with a load of random junk in it? Well, the obvious first answer is, It's hard to guess; people you know, or who know you (or watch you...?) will have a hard time guessing it within the few attempts they are usually allowed or within a reasonable time frame, so that's one vector covered right? Perfect.

That protects you from people you know, sure; those who aren't that technical and might just want to get into your social media and like all the silly little pages about kids TV shows and what not, but we're talking about the virtual realm now, in addition to the physical. So how do we defend against all the clever people on the internet who aren't targeting us directly, but are after all the logins! Well, you'll be surprised to find out that, all the rules that login providers ask for, them symbols, numbers and variable case letters play a nice part in protecting you from a few of these on their own, but how? By making it more computationally expensive and more time consuming for a brute force attack.

Well, the most basic rule of storing a password is "Don't store that damn password in plaintext", which is very useful right? Because if you store them in plaintext then anyone who gets hold of them through any number of nefarious means can just use them, they don't have to do anything except find them, So how do we solve this particular problem? Well, we utilize something called password hashing, and what is password hashing? Well it's the art of obscuring the password in a way that cannot be reversed (Or rather, that is what it should be, I obviously cannot speak for the thousands/millions of people who store passwords).

Okay, So how does this work? Well, there are a number hashing algorithms that have been used historically, such as MD5 and SHA-1, these are probably the most well known and they basically turn your password into something else. But what are they turning it into, well if we look at some examples here.

password = 5baa61e4c9b93f3f0682250b6cf8331b7ee68fd8

test = a94a8fe5ccb19ba61c4c0873d391e987982fbbd3

t3!st = 4bcd5c4388c5aea2013d1f8bbd80909c0842c82a

What's actually happening, well a "hash" is generated for each word, this hash will come back the same for every time that specific term is used, i.e. the term "password" will always be turned into "5baa61e4c9b93f3f0682250b6cf8331b7ee68fd8" and this is intended, because if you have no way of matching the passwords together when using an algorithm that is designed to not be reversed, then there's no way to verify that the password the user has passed in, is correct. This is called being Deterministic.

But how does it generate the hash!? Well, each algorithm obviously does it differently, or else there wouldn't be much point in having more than one hashing algorithm, but lets push into a breakdown of how SHA-1 works. If we consider, like every good program on the internet, that the algorithm works in stages, and stage one for SHA-1 would be called the "Length Calculation" stage.

Now, if we consider that our input is "abc", this is 24 bits long and can be broken down into binary like so -

- 'a' = 01100001

- 'b' = 01100010

- 'c' = 01100011

As such, the total length is 3×8=243×8=24 bits. So now that we have the length that we require, we can move onto the "padding" stage, this describes a process in which bits are added onto the initial input until it reaches a certain size, for SHA-1 this is 64 bits shy of being a multiple of 512, as we have such a small input this is easy to describe as it basically means, we're going to pad this input until it reaches a total length of (512-64) = 448 bits, So for SHA-1 specifically a single '1' bit is appended to the input so that 01100001 01100010 01100011 becomes 01100001 01100010 01100011 1 and then the bit value 0 is appended until the value is 448 bits long, so an additional 423 0s are appended onto the value. The next part of this padding stage is that they take the length of the original input, convert it to bits, and add it as a 64-bit value, for example with our input the length is 24 so the algorithm adds the value 11000, but this is not a full 64-bit integer, this is 5 bits, therefore they then pad this value with leading zero's so it now becomes 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00011000 and now we have 24 represented as a 64-bit integer, once this is calculated it is appended to the previous computed value and we end up with something like

01100001 01100010 01100011 10000000 00000000 ... 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00011000

Where the ... represents the collection of repeated 0 bits until the 448 bits requirement is satisfied and we now have our 512 bits long value. Now, once this is done, the algorithm defines 5 32-bit variables which are used as a starting point for the hashing process and they are as follows

H0 = 0x67452301and is derived from the square root of 2.H1 = 0xEFCDAB89and is is derived from the square root of 3.H2 = 0x98BADCFEand is derived from the square root of 5.H3 = 0x10325476and is derived from the square root of 7.H4 = 0xC3D2E1F0and is derived from the square root of 11.

These are important values, as they are updated throughout the following stages to produce the values required for the final hash, I will not go into detail on how these operations occur as they're a little beyond me (maybe I'll delve into the different algorithms in isolated posts) but will be described loosely as we go on.

The next step that occurs is to break the final padded value into blocks of 512-bits, for our example this is fine as we only have one block, however for other examples you can simple consider that value is segmented into blocks of 512-bits and then each separate block is processed separately. Once SHA-1 has our 512-bit block, it converts it into 32 sixteen bit words, and apparently utilizes the formula W[i] = (W[i-3] XOR W[i-8] XOR W[i-14] XOR W[i-16]) <<< 1 (where <<< denotes left circular shift) for words 16 to 79. (I'm not going to delve too far here, or else I may just go insane.)

So following on from the process of splitting out the value into the relevant segments it processes each of the 80 words using different formula's throughout, where the above is used for words 16 to 79 and others are used for other words, it then goes into what is known as an "iterative processing" loop and updates the 5 variables previously mentioned using a slew of bitwise operations, logical functions, and modular additions.

Once it has completed this process it concatenates the values of the 5 variables together and thus we get the hashed value, in this case A9993E364706816ABA3E25717850C26C9CD0D89D.

A fairly decent process, with a number of iterations inside to slow it down, however it's quite a fast hashing algorithm, and most hashing algorithms are quite fast and therein lies one of their downfalls for password hashing but they have served their purpose historically, which was to make it hard to read passwords that are stored, there are a few qualities that are desirable within hashing algorithms and some of these are

- To be deterministic, as in, a value will always resolve to the same hash.

- They should be Fixed-length, in that, the hash they return should always be the same length. (see Length extension attacks)

- "Preimage resistance", this is the word used to say that it should be VERY expensive to find the original input, so much as to be considered computationally infeasible.

- "Collision resistance", which is a nice and fancy way of saying the odds of 2 values coming up with the same hash should be ridiculously low.

- And they should look to utilize the "Avalanche effect", which is to say, a small change in value, should result in a drastically different hash.

Now, I've shown off sha-1, but I haven't discussed the fact that it's now considered bad for cryptographic usages, due to vulnerabilities that have cropped up over the years but it still works as a nice example of how a hashing algorithm works. But having said that, I haven't even covered an important part of utilizing a hashing algorithm to hash your passwords, this is called a "salt", and very much like how we would "salt" something in reality, it is essentially appending a random string to the end of a password so that your final hash is not easily reproducible.

These are used to prevent something called "Rainbow table" attacks, which in essence are when someone computes every value with a specific hashing algorithm, and they usually include common passwords like "password" in them, which then gives them a list of all hashes that are generated by that specific algorithm, what does this mean? Well, this means that if an attacker gets hold of your hash that they can compare it with their little (read: very large) table of hashes and figure out what your password was before it was hashed because the algorithm is deterministic.

So the world came up with a salt, and the best practice is to generate a large, random string to be a salt, and specifically to calculate a new salt PER PASSWORD, so that if someone gains access to your database of hashed passwords (which typically store a salt in plaintext right next to the password) then they would have to create a rainbow table for every value with the salt appended, which is fine, in general terms, if someone has access to your database where the salt is stored, you've got bigger problems than them getting the passwords, as such your infrastructure and systems should be designed to be resilient to unauthorised access.

So why am I still writing instead of implementing, well, there's one more thing to discuss, and that is called a "Key derivation function" which are similar to hashing algorithms but they utilize different mechanisms to make it more robust and secure, they incorporate something called a "work factor" which is essentially a value to say how computationally expensive it should be to calculate the specific output value, this makes them more resistant to brute-force attacks than a hashing algorithm. For example in PBKDF2 (Password-Based Key Derivation Function 2), a widely used KDF, the work factor dictates the number of iterations the algorithm goes through and the higher the iteration count, the higher the computational cost.

So what we're actually going to be using is a KDF not a hashing algorithm. As such I intend to utilize bcrypt from this lovely looking little package.

And yes, it did essentially just take me around 2000 words to explain, I'm going to use bcrypt; and I am so not sorry for sharing all of that (what I hope is) juicy knowledge :)

But to recap very quickly, we will hash our passwords using bcrypt, which will make use of a work factor and a salt, the salt will be stored inside the database beside the password and will be stored in plaintext, while the password will be stored as a hash, both will be loaded from the database into the managed code (C#) to perform the computation as the C# layer is typically more scalable than the database layer (Especially when SQL Server per core licensing comes into the picture).

From my understanding and research this is considered a common and acceptable approach throughout the industry.

And now, finally, we will implement some code, so first of all, I'd like to approach the database structure, which initially, can be as simple as a table that stores the following information

- Id (IDENTITY INT)

- Username (nvarchar, length 40)

- PasswordHash (varchar, length 100) bcrypt supposedly has a character limit of 60, but just incase, I can afford another 40 per record. (I can also update it later if I need to, when I actually start writing to the table.)

This is realistically, all we actually need to store, everything else is fluff at this point, we don't have any 2FA, so we don't really need an email address, we don't need any personal information, we simply need an id, a username and a password (+security stuff). As such we have a fairly simple SQL script for this.

IF NOT EXISTS (SELECT 1 FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_NAME = 'UserAccounts')

BEGIN

CREATE TABLE UserAccounts (

Id INT IDENTITY PRIMARY KEY,

Username NVARCHAR(40) NOT NULL

);

END;

As I intend for this script to be part of the initial "Installation" of my NuGet package, I have included a clause to only create it, if it does not already exist, this way, if I later add (or modify) new columns I can append a script to just add the single column to the table and place it in the same script and package consumers can just run the script whenever a change occurs to get the latest magic.

A very important note here is that, we don't store the salt or the work factor in their own columns, and you might be asking why, well the bcrypt package we use appends both of them onto the hashed password in a set format, so that all the information can be stored and accessed in a single string, rather than having to worry about ensuring storage mechanisms for it all separately, as I guess, there's really no need to worry, because they're only ever going to be used alongside the password anyway!

So now that we have our initial DB table, I need to look at how we map this data from the database into a model using MapDataReader, which I'll start by defining a BaseDbModel or BaseEntity which will define the required Id column which I'm going to enforce as a standard on all of my data, this has a nice side-effect of enabling very easy generic method and class creation, although I may not require much in the form of generics when it comes to interacting with my database due to the nature of using a source code generator over something like EF.

namespace Expedition.Auth.DbModels;

public abstract class BaseDbModel

{

public int Id { get; set; }

}

Now that I have defined this base model, we can use it to define a new UserAccountModel which will contain the same columns as my UserAccounts table, and not forgetting to add my GenerateDataReaderMapper which will enable the auto-generation of my "ORM" code to occur, noting also that we require a constructor due to my usage of the required keyword.

using MapDataReader;

namespace Expedition.Auth.DbModels;

[GenerateDataReaderMapper]

public class UserAccountModel(string username, string passwordHash)

{

public required string Username { get; set; } = username;

public required string PasswordHash { get; set; } = passwordHash;

}

With the generated code looking like this

// <auto-generated/>

#pragma warning disable 8019 //disable 'unnecessary using directive' warning

using System;

using System.Data;

using System.Linq;

using System.Collections.Generic; //to support List<T> etc

namespace MapDataReader {

public static partial class MapperExtensions {

public static void SetPropertyByName(this global::Expedition.Auth.DbModels.UserAccountModel target, string name, object value) {

SetPropertyByUpperName(target, name.ToUpperInvariant(), value);

}

private static void SetPropertyByUpperName(this global::Expedition.Auth.DbModels.UserAccountModel target, string name, object value) {

if (name == "USERNAME") {

target.Username = value as string;

return;

}

if (name == "PASSWORDHASH") {

target.PasswordHash = value as string;

return;

}

} //end method

}

}

Now obviously there's some stranger stuff going on in here, but it's scaffolded out the code, so I don't have to write it, wonderful! Obviously this is no comparison to something like EF which actually generates your SQL code, but I prefer a more transparent and fine-grained control over my SQL. Now the disadvantage here is that I need to manage my SQL connections myself and handle all the annoying stuff that we've all become so used to dealing with, So I'll create some kind of simple abstraction layer which will handle all of this for me going forward. Remember, if it can be made re-usable, you probably should!

using System.Data;

using System.Data.SqlClient;

namespace Expedition.Auth.Data;

public class SqlExecutor : IDisposable

{

private readonly string _connectionString;

public SqlExecutor(string connectionString)

{

_connectionString = connectionString;

}

public async Task<int> ExecuteNonQueryAsync(string query, params SqlParameter[]? parameters)

{

await using var connection = new SqlConnection(_connectionString);

await connection.OpenAsync();

await using var command = new SqlCommand(query, connection);

if (parameters != null)

{

command.Parameters.AddRange(parameters);

}

return await command.ExecuteNonQueryAsync();

}

public async Task<object> ExecuteScalarAsync(string query, params SqlParameter[]? parameters)

{

await using var connection = new SqlConnection(_connectionString);

await connection.OpenAsync();

await using var command = new SqlCommand(query, connection);

if (parameters != null)

{

command.Parameters.AddRange(parameters);

}

return await command.ExecuteScalarAsync() ?? throw new InvalidOperationException();

}

public async Task<IDataReader> ExecuteQueryAsync(string query, params SqlParameter[]? parameters)

{

var connection = new SqlConnection(_connectionString);

await connection.OpenAsync();

var command = new SqlCommand(query, connection);

if (parameters != null)

{

command.Parameters.AddRange(parameters);

}

var reader = await command.ExecuteReaderAsync(CommandBehavior.CloseConnection);

return reader;

}

public async Task<IDataReader> ExecuteReaderAsync(string query, params SqlParameter[]? parameters)

{

var connection = new SqlConnection(_connectionString);

await connection.OpenAsync();

var command = new SqlCommand(query, connection);

if (parameters != null)

{

command.Parameters.AddRange(parameters);

}

var reader = await command.ExecuteReaderAsync(CommandBehavior.CloseConnection);

return reader;

}

}

So we've got some nice stuff here, all our queries will be parameterised (well, the mechanism is here for it, and we should ensure we do it) and a number of useful methods we can utilize, importantly we're using the IDataReader where we can, so that we can utilize our MapDataReader generated code and as everything is disposed of nicely by the framework due to our usage of using statements we don't even need to worry about a dispose method!

So, the elephant in the room here is that every time we want to use this, we have to new() it up, instead of utilizing it with DI. So what we'll do, is we'll create an interface for this SqlExecutor so that it can be registered within DI, and then provide a nice pre-made extension method that will enable users to pass in their connection string when they define the DI registration itself, enabling them to use whatever configuration method they like, be it appsettings.json or environment variables, or well... anything! So we implement the following -

using System.Data;

using System.Data.SqlClient;

namespace Expedition.Auth.Data;

public interface ISqlExecutor

{

Task<int> ExecuteNonQueryAsync(string query, params SqlParameter[]? parameters);

Task<object> ExecuteScalarAsync(string query, params SqlParameter[]? parameters);

Task<IDataReader> ExecuteQueryAsync(string query, params SqlParameter[]? parameters);

Task<IDataReader> ExecuteReaderAsync(string query, params SqlParameter[]? parameters);

}

using System.Data;

using System.Data.SqlClient;

namespace Expedition.Auth.Data;

public class SqlExecutor : ISqlExecutor

{

//...omitted for brevity as included in previous code snippet

}

using Expedition.Auth.Data;

using Microsoft.Extensions.DependencyInjection;

namespace Expedition.Auth.DependencyInjection;

public static class SqlExecutorServiceCollectionExtensions

{

public static IServiceCollection AddSqlExecutor(this IServiceCollection services, string connectionString)

{

services.AddScoped<ISqlExecutor>(_ => new SqlExecutor(connectionString));

return services;

}

}

So this now enables any consumer to very easily register our SqlExecutor, although later on we'll be looking at how we will abstract this away from them enabling them to register it for use within our class library by making it internal and providing an overarching RegisterExpeditionAuth(connString) method, in fact, most of our stuff will be internal as it represents the internal processes of our library, and will expose only the methods required to work with it.

So now we have our SqlExecutor in place we can begin to look at how we might work towards building the code out to enable us to create a new user! As such we're going to build out an IAuthenticationService and it's relevant implementation, this will be one of the only parts of our library that people will be able to actually interact with, at least initially.

namespace Expedition.Auth.Services;

public interface IAuthenticationService

{

Task<bool> RegisterUserAsync(string username, string password);

Task<bool> ValidateUserAsync(string username, string password);

Task<bool> UpdatePasswordAsync(string username, string existingPassword, string newPassword);

}

While we've defined three methods here, We're only really concerned with the RegisterUserAsync initially, we can internally validate using SSMS (or other similar sql ide) that our records are correctly being inserted, but what I really want to do is take advantage of this to make a simple stored procedure to do the insert, this allows us a more fine-grained control over who can actually read the underlying tables, by keeping the app at "arms length" as it were and only giving it the permissions it actually needs, and in this case, preventing it from accessing anything but what is allowed, meaning that it makes it significantly more difficult to breach the underlying tables.

IF NOT EXISTS (SELECT * FROM sys.objects WHERE type = 'P' AND name = 'RegisterUser')

BEGIN

EXEC('CREATE PROCEDURE dbo.RegisterUser AS BEGIN RETURN END;');

END

GO

ALTER PROCEDURE dbo.RegisterUser

@Username NVARCHAR(40),

@PasswordHash VARCHAR (100)

AS

BEGIN

IF NOT EXISTS (SELECT 1 FROM UserAccounts WHERE Username = @Username)

BEGIN

INSERT INTO UserAccounts (Username, PasswordHash)

VALUES (@Username, @PasswordHash);

SELECT 1 AS Success; -- Indicate successful registration

END

ELSE

BEGIN

SELECT 0 AS Success; -- Indicate username already exists

END

END

So now that we have our stored procedure we can implement the C# code required to call it within the AuthenticationService.RegisterUserAsync method.

using System.Data;

using System.Data.SqlClient;

using Expedition.Auth.Data;

namespace Expedition.Auth.Services;

public class AuthenticationService(ISqlExecutor sqlExecutor) : IAuthenticationService

{

public async Task<bool> RegisterUserAsync(string username, string password)

{

//11 WF is actually the default, but we can look to provide a config based setup so consumers can select their own.

var hashedPassword = BCrypt.Net.BCrypt.HashPassword(password, 11);

if (hashedPassword == null) throw new InvalidOperationException("This password could not be hashed");

var registrationResult = await CallRegisterUserStoredProcedure(username, hashedPassword);

return registrationResult == DatabaseConstants.RegisterUserResultCode.UserCreated;

}

private async Task<DatabaseConstants.RegisterUserResultCode> CallRegisterUserStoredProcedure(string username, string hashedPassword)

{

// Create parameters

var usernameParam = new SqlParameter("@Username", SqlDbType.NVarChar) { Value = username };

var passwordHashParam = new SqlParameter("@PasswordHash", SqlDbType.NVarChar) { Value = hashedPassword };

var returnVal = await sqlExecutor.ExecuteScalarAsync(DatabaseConstants.StoredProcedures.RegisterUserCommand, usernameParam,

passwordHashParam);

if (returnVal is not int) throw new InvalidOperationException("The database response was not a valid int.");

return (DatabaseConstants.RegisterUserResultCode) returnVal;

}

public Task<bool> ValidateUserAsync(string username, string password)

{

throw new NotImplementedException();

}

public Task<bool> UpdatePasswordAsync(string username, string existingPassword, string newPassword)

{

throw new NotImplementedException();

}

}

As you can see there's some nice stuff going on here, first of all, we hash the incoming password and if for some reason a null is returned from the bcrypt library, we throw an InvalidOperationException, unfortunately, In this scenario we have no actual information from the base library to provide the consumer so we give them a generic message that lets them know as much as we know. After that we make our call to the stored procedure, I have opted to split this out into it's own little private method for the sake of readability and maintaining small, nice units of code, which we can later provide unit tests for, within this new method we have some additional error handling around unexpected responses again but (I believe) it's unlikely to ever occur, due to our control over the underlying responses.

You'll notice I've added some some DatabaseConstants and these are essentially strings and enums that provide us a cleaner DX (Developer experience) as we're working, here's the current DatabaseConstants.cs which just ensures that a) we have an easy way of identifying what the responses from our Stored procedures are (If they are a single integer) and that we can easily re-use the sql strings throughout and reduce the risk of typos etc.

namespace Expedition.Auth.Data;

public static class DatabaseConstants

{

public static class StoredProcedures

{

public const string RegisterUserCommand = "EXEC RegisterUser @Username, @PasswordHash";

}

public enum RegisterUserResultCode {

UserAlreadyExists = 0,

UserCreated = 1

}

}

And if this file starts to get unwieldy later on, we can very easily separate out different parts into their own files, but we can maintain it as a single file for now, as the scope is likely to remain quite small.

Okay, now that we have an initial implementation that we need to test, we can use the API that we setup earlier to actually trigger the RegisterUserAsync and ensure the implementation is correct, I'd LOVE to automate this, which I'll do as an "Integration test" later on, which will utilize some nice packages to spin up relevant docker containers etc while also setting up some unit testing for quicker feedback testing cycles.

So, first of all, we'll add some code into the API that will at least enable us to use swagger to trigger the code within our class library and then we can verify that it's working etc. So first of all, I'll scrap all the minimal API nonsense that the projects come setup with, (I really don't see what MSFT was aiming for here...) and create an AuthenticationController which will be our little testing rig as well as adding our call to the packages DependencyInjection helpers.

AuthenticationController.cs

using Expedition.Auth.Services;

using Microsoft.AspNetCore.Mvc;

namespace ExpeditionAuth.API.Controllers;

[ApiController]

public class AuthenticationController : ControllerBase

{

private readonly IAuthenticationService _authenticationService;

public AuthenticationController(IAuthenticationService authenticationService)

{

_authenticationService = authenticationService;

}

public class RegisterUserRequest

{

public required string Username { get; set; }

public required string Password { get; set; }

}

[HttpPost]

public async Task<IActionResult> Register(RegisterUserRequest request)

{

if (await _authenticationService.RegisterUserAsync(request.Username, request.Password)) return Ok();

return StatusCode(500);

}

}

Program.cs

using Expedition.Auth.DependencyInjection;

namespace ExpeditionAuth.API;

public class Program

{

public static void Main(string[] args)

{

var builder = WebApplication.CreateBuilder(args);

// Add services to the container.

builder.Services.AddAuthorization();

// Learn more about configuring Swagger/OpenAPI at https://aka.ms/aspnetcore/swashbuckle

builder.Services.AddEndpointsApiExplorer();

builder.Services.AddSwaggerGen();

builder.Services.AddControllers();

//Add a simple connection string.

builder.Services.AddExpeditionAuth("Server=(local);Database=Expedition;Trusted_Connection=True;");

var app = builder.Build();

// Configure the HTTP request pipeline.

if (app.Environment.IsDevelopment())

{

app.UseSwagger();

app.UseSwaggerUI();

}

app.UseRouting();

// app.UseHttpsRedirection();

app.UseAuthorization();

app.MapControllerRoute(name: "default",

pattern: "{controller=Home}/{action=Index}/{id?}");

app.Run();

}

}

As you can see, I've taken the liberty of adding a basic connection string in place as a hardcoded value for now, obviously in practice we really should never do this. But for a little test, I think we're okay for the moment! So the one part I've realised that I missed was adding some DI helpers for the AuthenticationService which means that the controller will be unable to resolve it as a dependency, so our next step is to add an additional DI helper to deal with this, I'll actually wrap the SqlExecutor call up inside it as well, so that we can have a single DI call from program.cs so that anyone who utilizes the package will only need to call a single method for connecting up. So our DI Helper ends up looking more like this

using Expedition.Auth.Data;

using Expedition.Auth.Services;

using Microsoft.Extensions.DependencyInjection;

namespace Expedition.Auth.DependencyInjection;

public static class ServiceCollectionExtensions

{

private static IServiceCollection AddSqlExecutor(this IServiceCollection services, string connectionString)

{

services.AddScoped<ISqlExecutor>(_ => new SqlExecutor(connectionString));

return services;

}

public static IServiceCollection AddExpeditionAuth(this IServiceCollection services, string connectionString)

{

services.AddSqlExecutor(connectionString);

services.AddScoped<IAuthenticationService, AuthenticationService>();

return services;

}

}

Now this means that the provided IServiceCollection suggestions will never be able to prompt a user to utilize the AddSqlExecutor method, and we've nicely wrapped it all up in a bow, and obviously any new DI we need, we just register inside the AddExpeditionAuth method and the calling consumer will instantly have it included as long as they call the AddExpeditionAuth method on their DI container like so

builder.Services.AddExpeditionAuth("Server=(local);Database=Expedition;Trusted_Connection=True;");

Now that we have that out of the way, we can run our API app and we should be able to interact via the default swagger interface that's included in the .NET API.

fail: Microsoft.AspNetCore.Diagnostics.DeveloperExceptionPageMiddleware[1]

An unhandled exception has occurred while executing the request.

System.InvalidOperationException: Action 'ExpeditionAuth.API.Controllers.AuthenticationController.Register (ExpeditionAuth.API)' does not have an attribute route. Action methods on controllers annotated with ApiControllerAttribute must be attribute routed.

at....

Whoops! Guess I forgot to add a route to the controller method! What I'll do here is assign a route to the controller and the method individually, so that the system will group any endpoints by controller nicely in swagger.

using Expedition.Auth.Services;

using Microsoft.AspNetCore.Mvc;

namespace ExpeditionAuth.API.Controllers;

[ApiController]

[Route("[controller]")]

public class AuthenticationController : ControllerBase

{

private readonly AuthenticationService _authenticationService;

public AuthenticationController(AuthenticationService authenticationService)

{

_authenticationService = authenticationService;

}

public class RegisterUserRequest

{

public required string Username { get; set; }

public required string Password { get; set; }

}

[HttpPost]

[Route("Register")]

public async Task<IActionResult> Register(RegisterUserRequest request)

{

if (await _authenticationService.RegisterUserAsync(request.Username, request.Password)) return Ok();

return StatusCode(500);

}

}

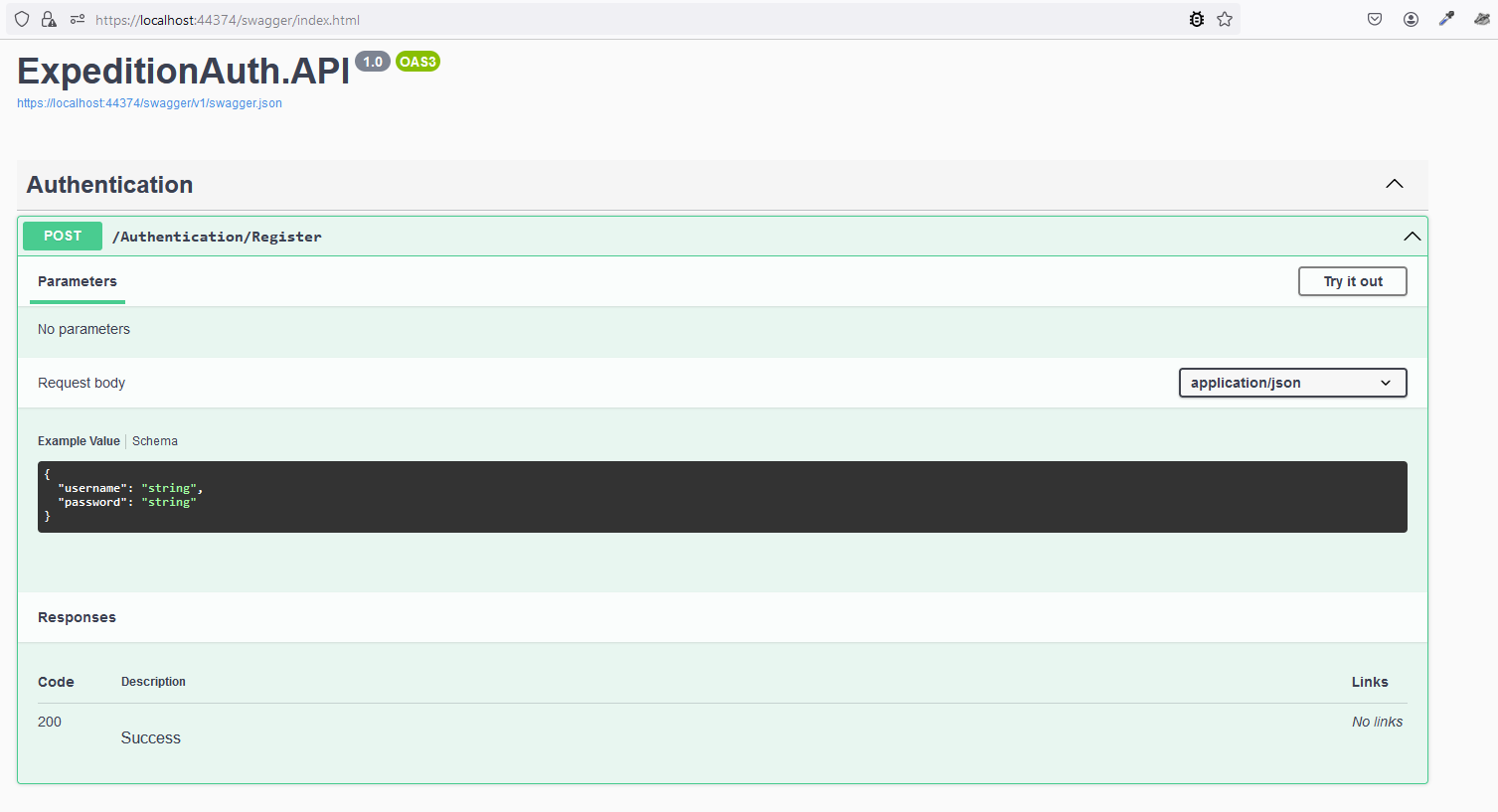

And now that we have all of that sorted, we get to see our lovely swagger FE allowing us to make our call to the API.

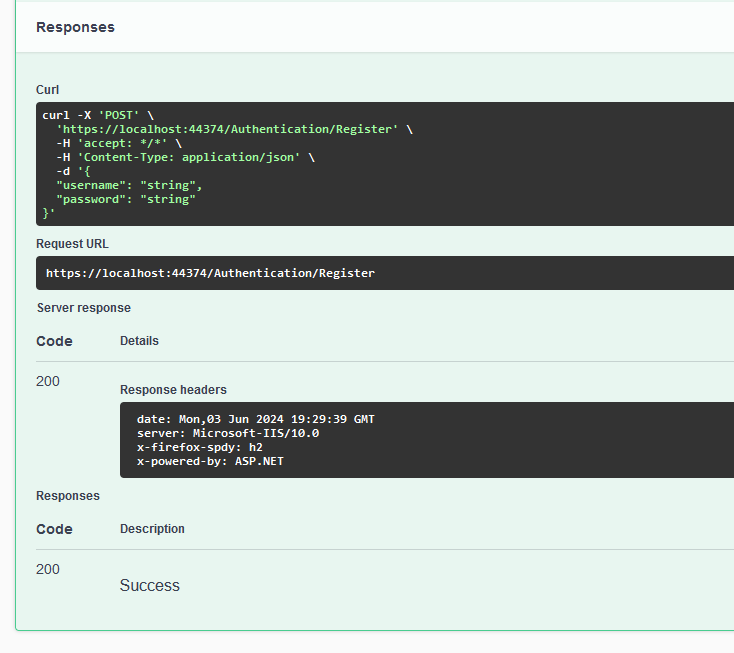

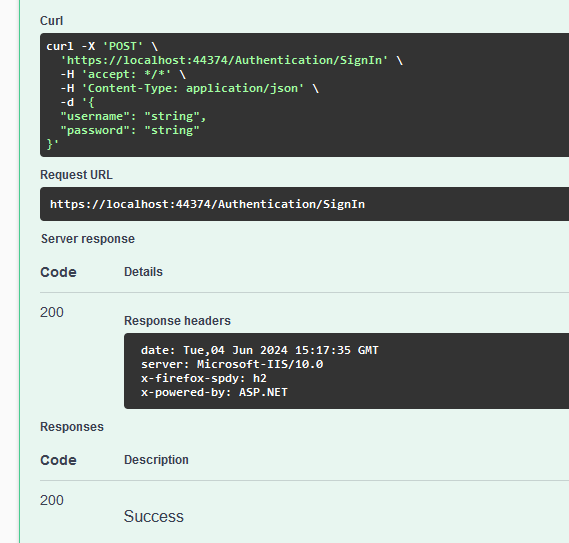

So now, I can make a nice call with some fairly arbitrary values to test my implementation is working as desired

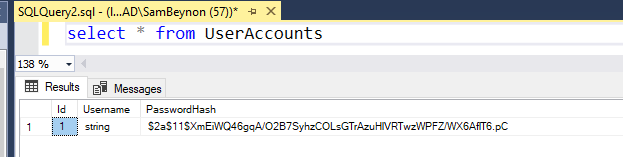

As described within our code, we receive a 200 "OK" result indicating that the user account has been successfully registered within our system, for the moment, all we can do is verify this utilizing a SQL client, as such a quick SELECT * from UserAccounts should give use the information we need to confirm that the user was indeed created and we are ready to move onto our next step of verifying that it's correct as our actual username is "string" and our unhashed password is also the value "string" we'll be able to verify against that.

As you can see, we have a user with the correct username, and a password we cannot read which is exactly 60 characters long, which means we're good to go and our schema has been built correctly to handle this. The next step is to implement our VerifyUserAsync method after which we can build out some integration tests so that these flows can be validated in the future very easily. As such, in keeping with our established patterns, I will crate a stored procedure to retrieve the details of a specific user from the database.

IF NOT EXISTS (SELECT * FROM sys.objects WHERE type = 'P' AND name = 'GetUserByUsername')

BEGIN

EXEC('CREATE PROCEDURE dbo.GetUserByUsername AS BEGIN RETURN END;');

END

GO

ALTER PROCEDURE dbo.GetUserByUsername

@Username NVARCHAR(40)

AS

BEGIN

SELECT

Id,

Username,

PasswordHash

FROM UserAccounts

WHERE Username = @Username

END

GO

Now that I have the relevant stored procedure to call, I can built out the C# code required to interact with it, which finally includes usage of the MapDataReader library!

It was at this point that I realised the MapDataReader library wasn't going to work for me, because it doesn't support .NET 8 fully, it did not create the relevant MapTo() extensionMethods that were expected, therefore I have downgraded the project to .NET 6 for the moment and plan to create a PR on the MapDataReader repo to add this support and we'll migrate back to .Net 8 at that point.

public async Task<ValidateUserResult> ValidateUserAsync(string username, string password)

{

var user = await CallGetUserByUsernameProcedure(username);

if (user == null) return new ValidateUserResult(ValidateUserResultCode.NoMatchingUser);

var passwordMatches = BCrypt.Net.BCrypt.Verify(password, user.PasswordHash);

if(!passwordMatches) return new ValidateUserResult(ValidateUserResultCode.InvalidPassword);

return new ValidateUserResult(ValidateUserResultCode.Success, user);

}

private async Task<UserAccountModel?> CallGetUserByUsernameProcedure(string username)

{

var usernameParam = new SqlParameter("@Username", SqlDbType.NVarChar) { Value = username };

var returnVal = await _sqlExecutor.ExecuteReaderAsync(

DatabaseConstants.StoredProcedures.GetUserByUsernameCommand,

usernameParam);

var userList = returnVal.ToUserAccountModel();

return !userList.Any() ? null : userList.SingleOrDefault();

}

using Expedition.Auth.DbModels;

namespace Expedition.Auth.Results;

public class ValidateUserResult

{

public ValidateUserResult(ValidateUserResultCode resultCode)

{

ResultCode = resultCode;

}

public ValidateUserResult(ValidateUserResultCode resultCode, UserAccountModel user) :this(resultCode)

{

User = user;

}

public UserAccountModel? User { get; set; }

public ValidateUserResultCode ResultCode { get; set; }

}

public enum ValidateUserResultCode

{

Success,

NoMatchingUser,

InvalidPassword

}

namespace Expedition.Auth.Data;

public static class DatabaseConstants

{

public static class StoredProcedures

{

public const string RegisterUserCommand = "EXEC RegisterUser @Username, @PasswordHash";

public const string GetUserByUsernameCommand = "EXEC GetUserByUsername @Username";

}

public enum RegisterUserResultCode {

UserAlreadyExists = 0,

UserCreated = 1

}

}

As you can see, I've introduced a new pattern here, which enables us to report different states to the service's consumer, this is referred to as the "Result" pattern and is very useful when you have a scenario that can end in multiple ways, such as no user matching what you searched for, or the password not matching the hashed one we have stored. This then enables the consumer to handle these results however they like very easily and by providing an enum, rather than a "code" we wrap it up nicely so that it remains typed and provides a nice DX.

We've also added a new constant to enable us easy access to the SQL string required to call the relevant stored procedure, as well as a new private method that handles the actual DB response & calling of the ISqlExecutor so we're free to handle the "business logic" within the main VerifyUserAsync method.

Thankfully, it's fairly simple to do all of this, and there isn't too much complication, I may however opt to move the private methods into a new internal adapter/service/repository later so that the implementations can be easily switched should the need arise! As well as providing us with the ability to potentially mock/fake the calls so that we can unit test nicely.

But now that we have the ValidateUserAsync implemented, we need to test that it works, so we'll add a new SignIn method to our controller and run a few scenarios.

[HttpPost]

[Route("SignIn")]

public async Task<IActionResult> SignIn(RegisterUserRequest request)

{

var signInResult = await _authenticationService.ValidateUserAsync(request.Username, request.Password);

return signInResult.ResultCode switch

{

ValidateUserResultCode.Success => Ok(),

ValidateUserResultCode.NoMatchingUser => Unauthorized("Username was incorrect"),

ValidateUserResultCode.InvalidPassword => Unauthorized("Password was incorrect."),

_ => throw new ArgumentOutOfRangeException()

};

}

You'll notice here, that I currently re-using the RegisterUserRequest and that's because I'm affording myself the luxury of being lazy and as the API isn't going to be built out as a full system, I can happily let myself slip on a few standards here, as the data structure is the same between Register and SignIn anyway. (at least... currently!)

But now we can test a quick initial scenario and then we'll look at adding integration tests to make it more convenient to test and re-test our scenario's to ensure that we don't break any expected behaviours.

As can be seen, we have successfully validated our username/password! HUZZAH! So now, how do we make this an automatable test so that we don't have to run swagger and manually input data every time we make a change to ensure we haven't broken stuff, Getting these tests in earlier will ensure that we can start with a process of quality assurance and automated regression testing quite nicely going forward as the "framework" to add new tests will be in place already.

As such, we'll add a new testing project to our solution, and install the Microsoft.AspNetCore.Mvc.Testing NuGet package which will provide us with a number of nice utilities to spin up an in-memory API and crack on as needed! Specifically we'll be utilising the WebApplicationFactory<TEntryPoint> class that is used to spawn the test server instance in memory. Which means I don't have to run the API manually every time to run the tests.

The beauty is, we don't even need to worry too much here, we can simply "stick it in" the test method and go. Like so

using System.Text;

using System.Text.Json;

using ExpeditionAuth.API;

using ExpeditionAuth.API.Controllers;

using Microsoft.AspNetCore.Mvc.Testing;

namespace ExpeditionAuth.Api.Tests;

public class AuthenticationControllerTests

{

[Fact]

public async Task RegisterUser_Creates_New_User()

{

await using var application = new WebApplicationFactory<Program>();

using var client = application.CreateClient();

var dataObject = new AuthenticationController.RegisterUserRequest()

{ Username = "your_username", Password = "your_password" };

// Serialize the object to JSON string

var json = JsonSerializer.Serialize(dataObject);

// Create JsonContent with appropriate media type

var content = new StringContent(json, Encoding.UTF8, "application/json");

var response = await client.PostAsync("/Authentication/Register",content );

Assert.True(response.IsSuccessStatusCode);

}

}

Now there are some issues here, one obvious glaring issue is that we have no way to override which database the integration test system is using, so the test is likely to fail the second time around as we are using a non-transient database (my local dev database). So the first thing we need to do is create our own customer WebApplicationFactory as this will enable us to override DI to pass in a connection string for a different database than our main one.

I should probably mention here, that i'm using XUnit for these tests, other testing frameworks may differ

using Expedition.Auth.DependencyInjection;

using ExpeditionAuth.API;

using Microsoft.AspNetCore.Hosting;

using Microsoft.AspNetCore.Mvc.Testing;

using Microsoft.AspNetCore.TestHost;

namespace ExpeditionAuth.Api.Tests;

public class ApiWebApplicationFactory : WebApplicationFactory<Program>

{

protected override void ConfigureWebHost(IWebHostBuilder builder)

{

builder.ConfigureTestServices(s =>

{

s.AddExpeditionAuth("");

});

}

}

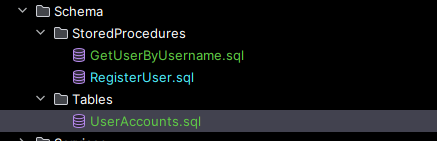

Now that we have this, we need to come up with a nice way of setting this up, and my preferred approach here is to use a package called TestContainers which will spin up a new instance of a docker container running SQL and provide us a connection string, this ensure that the Database is newly created every time and that we have a "clean slate" to perform our tests on. Of course, one of the bigger issues here, is that we need to build out the database every time we spawn the DB, so we'll need to address creating our install.sql script and finding a way to run that on the DB's after they are ready for use but before we run our tests. So we'll address creating that script first, as you may remember, we have all of our schema files stored in our solution.

So now we can install TestContainers (and the MSSQL test container package) and spin up a db within our custom WebAppFactory and pass the connectionstring through to the overridden DI using ConfigureTestServices

using Expedition.Auth.DependencyInjection;

using ExpeditionAuth.API;

using Microsoft.AspNetCore.Hosting;

using Microsoft.AspNetCore.Mvc.Testing;

using Microsoft.AspNetCore.TestHost;

using Testcontainers.MsSql;

namespace ExpeditionAuth.Api.Tests;

public class ApiWebApplicationFactory : WebApplicationFactory<Program>

{

private readonly MsSqlContainer _db;

public ApiWebApplicationFactory()

{

_db = new MsSqlBuilder().Build();

_db.StartAsync().Wait();

}

protected override void ConfigureWebHost(IWebHostBuilder builder)

{

builder.ConfigureTestServices(s =>

{

s.AddExpeditionAuth(_db.GetConnectionString());

});

}

protected override void Dispose(bool disposing)

{

if (disposing)

{

_db.StopAsync().Wait();

_db.DisposeAsync().GetAwaiter().GetResult();

}

base.Dispose(disposing);

}

}

public class AuthenticationControllerTests: IClassFixture<ApiWebApplicationFactory>

{

private readonly HttpClient _client;

public AuthenticationControllerTests(ApiWebApplicationFactory fac)

{

_client = fac.CreateClient();

}

[Fact]

public async Task RegisterUser_Creates_New_User()

{

var dataObject = new AuthenticationController.RegisterUserRequest()

{ Username = "your_username", Password = "your_password" };

// Serialize the object to JSON string

var json = JsonSerializer.Serialize(dataObject);

// Create JsonContent with appropriate media type

var content = new StringContent(json, Encoding.UTF8, "application/json");

var response = await _client.PostAsync("/Authentication/Register",content );

Assert.True(response.IsSuccessStatusCode);

}

}

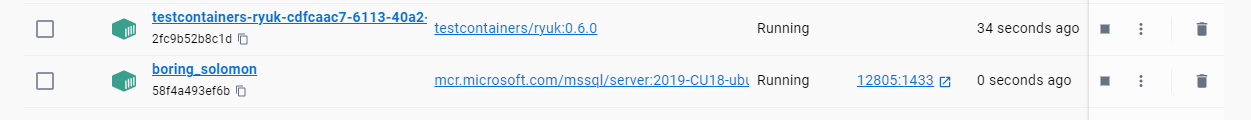

And now if I debug through into my ApiWebAppFactory, to the state that it is in after the container has been started, we can see it in our Docker Desktop

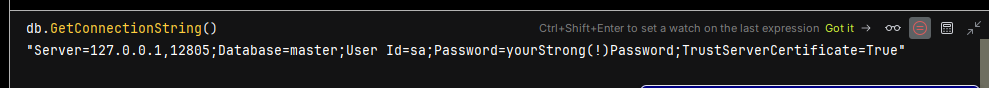

Now we know that this has correctly been built up and provisioned, and sure enough, when accessing the connection string in a debug context, we get a nice connection string with everything we need, which can then be passed through to the DI as is displayed above.

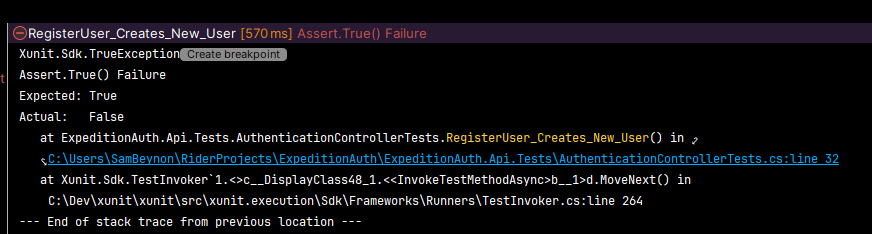

So now that we have that, we can let the tests run, knowing that they'll fail because the db is a blank slate, but we should receive an exception when it attempts to run a stored procedure.

However, the test does not give us quite the information we require to determine this straight off the bat, the only reason we're aware is because we know we haven't set the database up properly yet, but debugging through will likely provide us with better information.

So now we have that sorted, we should come up with some way of processing the saved scripts within our schema files against this new transient database so that we have all our tables and stored procs available to us, enabling us to build out our functional/integration testing environment nicely and so I implemented the following

using System.Data.SqlClient;

using Expedition.Auth.DependencyInjection;

using ExpeditionAuth.API;

using Microsoft.AspNetCore.Hosting;

using Microsoft.AspNetCore.Mvc.Testing;

using Microsoft.AspNetCore.TestHost;

using Testcontainers.MsSql;

namespace ExpeditionAuth.Api.Tests;

public class ApiWebApplicationFactory : WebApplicationFactory<Program>

{

private readonly MsSqlContainer _db;

public ApiWebApplicationFactory()

{

_db = new MsSqlBuilder().Build();

_db.StartAsync().Wait();

InitializeDatabase().GetAwaiter().GetResult();

}

protected override void ConfigureWebHost(IWebHostBuilder builder)

{

builder.ConfigureTestServices(s =>

{

s.AddExpeditionAuth(_db.GetConnectionString());

});

}

private async Task InitializeDatabase()

{

await using var connection = new SqlConnection(_db.GetConnectionString());

await connection.OpenAsync();

var baseDir = @"..\..\..\..\Expedition.Auth\Schema";

var files = new List<string>

{

$@"{baseDir}\Tables\UserAccounts.sql",

$@"{baseDir}\StoredProcedures\RegisterUser.sql",

$@"{baseDir}\StoredProcedures\GetUserByUsername.sql"

};

foreach (var file in files)

{

var fullScript = await File.ReadAllTextAsync(file);

//we should split these on any "GO" commands as they cannot be included in SqlCommands

var scripts = fullScript.Split("GO");

foreach (var script in scripts)

{

await using var command = new SqlCommand(script, connection);

await command.ExecuteNonQueryAsync();

}

}

}

protected override void Dispose(bool disposing)

{

if (disposing)

{

_db.StopAsync().Wait();

_db.DisposeAsync().GetAwaiter().GetResult();

}

base.Dispose(disposing);

}

}

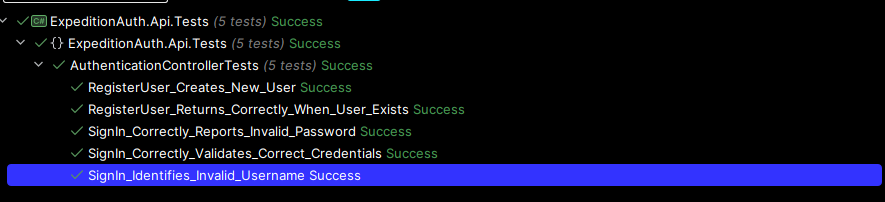

Take notice of the InitializeDatabase method, this is where the magic happens, although I would prefer to come up with something a little easier to maintain, for now this will handle everything I need when building out my blank slate DB, and as can be seen from my "Unit tests" window, it has now marked the test as successful.

Which means I now have a working, automated functional testing rig, that works off an actual database that is running in docker and is specific to each run of the tests so anything left over is automatically torn down and and we start with a fresh, blank slate after for every test run.

So now that's in place, I can add a few extra scenario's for assurance throughout both of my methods.

using System.Net;

using System.Text;

using System.Text.Json;

using ExpeditionAuth.API;

using ExpeditionAuth.API.Controllers;

using Microsoft.AspNetCore.Mvc.Testing;

namespace ExpeditionAuth.Api.Tests;

public class AuthenticationControllerTests: IClassFixture<ApiWebApplicationFactory>

{

private readonly HttpClient _client;

public AuthenticationControllerTests(ApiWebApplicationFactory fac)

{

_client = fac.CreateClient();

}

[Fact]

public async Task RegisterUser_Creates_New_User()

{

var dataObject = new AuthenticationController.RegisterUserRequest()

{ Username = "your_username", Password = "your_password" };

var json = JsonSerializer.Serialize(dataObject);

var content = new StringContent(json, Encoding.UTF8, "application/json");

var response = await _client.PostAsync("/Authentication/Register",content );

Assert.True(response.IsSuccessStatusCode);

}

[Fact]

public async Task RegisterUser_Returns_Correctly_When_User_Exists()

{

var username = Guid.NewGuid().ToString();

var dataObject = new AuthenticationController.RegisterUserRequest { Username = username, Password = "your_password" };

var json = JsonSerializer.Serialize(dataObject);

var content = new StringContent(json, Encoding.UTF8, "application/json");

var firstResponse = await _client.PostAsync("/Authentication/Register",content );

var secondResponse = await _client.PostAsync("/Authentication/Register",content );

Assert.True(firstResponse.IsSuccessStatusCode &&

!secondResponse.IsSuccessStatusCode);

}

[Fact]

public async Task SignIn_Correctly_Validates_Correct_Credentials()

{

var username = Guid.NewGuid().ToString();

var dataObject = new AuthenticationController.RegisterUserRequest { Username = username, Password = "your_password" };

var json = JsonSerializer.Serialize(dataObject);

var content = new StringContent(json, Encoding.UTF8, "application/json");

var firstResponse = await _client.PostAsync("/Authentication/Register",content );

var secondResponse = await _client.PostAsync("/Authentication/SignIn",content );

Assert.True(firstResponse.IsSuccessStatusCode &&

secondResponse.IsSuccessStatusCode);

}

[Fact]

public async Task SignIn_Identifies_Invalid_Username()

{

var username = Guid.NewGuid().ToString();

var dataObject = new AuthenticationController.RegisterUserRequest { Username = username, Password = "your_password" };

var json = JsonSerializer.Serialize(dataObject);

var content = new StringContent(json, Encoding.UTF8, "application/json");

var response = await _client.PostAsync("/Authentication/SignIn",content );

var message = await response.Content.ReadAsStringAsync();

Assert.True(response.StatusCode == HttpStatusCode.Unauthorized &&

message == "Username was incorrect");

}

[Fact]

public async Task SignIn_Correctly_Reports_Invalid_Password()

{

var username = Guid.NewGuid().ToString();

var dataObject = new AuthenticationController.RegisterUserRequest { Username = username, Password = "your_password" };

var json = JsonSerializer.Serialize(dataObject);

var content = new StringContent(json, Encoding.UTF8, "application/json");

await _client.PostAsync("/Authentication/Register",content );

dataObject.Password = "Test";

json = JsonSerializer.Serialize(dataObject);

content = new StringContent(json, Encoding.UTF8, "application/json");

var secondResponse = await _client.PostAsync("/Authentication/SignIn",content );

var message = await secondResponse.Content.ReadAsStringAsync();

Assert.True(secondResponse.StatusCode == HttpStatusCode.Unauthorized &&

message == "Password was incorrect");

}

}

So now I'm essentially covered when it comes to these scenario's, I can run these tests at will and ensure that my functionality is working as intended, and if I happen to break them, then I have the benefit of these tests telling me, this enables me to ensure any refactoring does not negatively affect the existing functionality, as well as ensuring the code continues to run as expected even after adding new features throughout that may tap into some of the same shared underlying methods; but on top of that, it provides me a quick and easy way to test my functionality without having to run my API every time and input new data, as well as the wonderful advantage of being able to run these tests on any build agents in CI/CD pipelines to ensure that I don't accidentally push any breaking changes to my package. (We'll address this at some stage.)

As such, I believe my next step will be to link into the [Authorize] attribute that is provided by the .NET framework and start looking at session management between different types of applications such as Razor based MVC applications and my current WebApi; but I think this first part is now ready for the world and there's enough to digest for the moment.