Custom rolling authentication in .NET for fun! Part 2

2024-06-12

So, this is part 2 of my series on building authentication in .NET. In part 1 I shared some insights into how password hashing works, then went ahead and used a nice pre-built library to implement it (because, quite frankly, I'm not that smart! Yet, I hope), and started building out the foundations for this library, including some simple implementations of the Registration and User Sign In flows, with some nice functional tests to keep them easy to test.

However, this post is going to delve into authorization (using the [Authorize] attribute). That is to say: once a user has signed in, how do we confirm they are still signed in without needing them to constantly provide their login credentials? Along the way we'll create an E2E automated testing framework that can provide all the insight we need for building out our authentication and authorization flows going forward.

So our first step here will be to look at how we need to address different types of consuming applications, as we're essentially building a library that can be included in:

- A web API - where we will need to utilize something like JWT

- A desktop application - where we will need to use some form of secured storage on the local system.

- A more traditional .NET web app, such as an MVC site - where we can utilize cryptographically secured cookies.

So the first step, I suppose, will be to make sure I have reliable testing infrastructure in place, so that I can easily test all of the above scenarios. As such, I'll add a new MVC project and a test project for the MVC app to my solution, along with a controller I can use as a nice little testing rig for my cookie-based authentication flow.

The new MVC project starts with a small HomeController, so I'll use that initially to try out the integration testing mechanisms and make sure I can access the correct data from the contexts related to it.

The way forward here is to build out some tests within our new testing project, with a similar setup to the one we used for the API testing. This time we can use the TestServer exposed by the Microsoft.AspNetCore.TestHost package.

```csharp
using System.Diagnostics;
using ExpeditionAuth.MVC.Models;
using Microsoft.AspNetCore.Mvc;

namespace ExpeditionAuth.MVC.Controllers;

public class HomeController : Controller
{
    private readonly ILogger<HomeController> _logger;

    public HomeController(ILogger<HomeController> logger)
    {
        _logger = logger;
    }

    public IActionResult Index()
    {
        return View();
    }

    public IActionResult Privacy()
    {
        return View();
    }

    [ResponseCache(Duration = 0, Location = ResponseCacheLocation.None, NoStore = true)]
    public IActionResult Error()
    {
        // Use the current Activity id if there is one, otherwise the request's trace identifier.
        return View(new ErrorViewModel { RequestId = Activity.Current?.Id ?? HttpContext.TraceIdentifier });
    }
}
```

```csharp
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.TestHost;

namespace ExpeditionAuth.MVC.Tests;

public class MvcAppTestFixture : IDisposable
{
    public HttpClient Client { get; }

    private readonly TestServer _server;

    // xUnit needs a public constructor to create a class fixture.
    public MvcAppTestFixture()
    {
        _server = new TestServer(new WebHostBuilder()
            .UseStartup<Program>());

        Client = _server.CreateClient();
    }

    public void Dispose()
    {
        Client.Dispose();
        _server.Dispose();
    }
}
```

```csharp
using System.Net;

namespace ExpeditionAuth.MVC.Tests;

// IClassFixture shares one MvcAppTestFixture across all tests in this class.
public class HomeTests : IClassFixture<MvcAppTestFixture>
{
    private readonly HttpClient _client;

    public HomeTests(MvcAppTestFixture fixture)
    {
        _client = fixture.Client;
    }

    [Fact]
    public async Task HomePageShouldReturnSuccessStatusCode()
    {
        var response = await _client.GetAsync("/");

        response.EnsureSuccessStatusCode();
        Assert.Equal(HttpStatusCode.OK, response.StatusCode);
    }
}
```

This is our first exploration into using TestServer, so my first concern is, of course, making sure it works as described. As you can see above, we have a simple test that just checks we get the expected status code back. Based on how the default MVC app works, a request to / should return a view with a 200 OK status code.

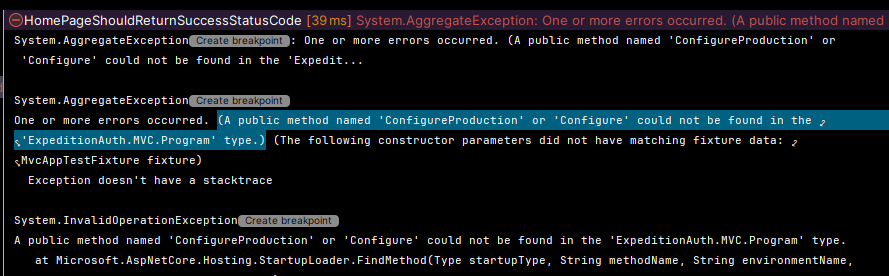

It was at this point that I realized I had missed something. The default MVC template sets everything up in Program.cs using the minimal hosting model, and its Program class doesn't define the Configure method that UseStartup expects, so it doesn't work with TestServer in this form. This is helpfully reported when running the test.

As such, we need to modify Program.cs to behave the way we require, by changing it to match the following:

```csharp
namespace ExpeditionAuth.MVC;

public class Program
{
    public static void Main(string[] args)
    {
        CreateHostBuilder(args).Build().Run();
    }

    // Generic host plus a Startup class, so TestServer can reuse the same Startup.
    public static IHostBuilder CreateHostBuilder(string[] args) =>
        Host.CreateDefaultBuilder(args)
            .ConfigureWebHostDefaults(webBuilder =>
            {
                webBuilder.UseStartup<Startup>();
            });
}
```

Additionally, we need to add a new Startup.cs. It does everything Program.cs was doing before, but it exposes the ConfigureServices and Configure methods that TestServer expects, so we can set up a proper test server.

```csharp
namespace ExpeditionAuth.MVC;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddControllersWithViews();
    }

    public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
    {
        if (env.IsDevelopment())
        {
            app.UseDeveloperExceptionPage();
        }
        else
        {
            app.UseExceptionHandler("/Home/Error");
            app.UseHsts();
        }

        app.UseHttpsRedirection();
        app.UseStaticFiles();

        app.UseRouting();

        // Authentication must run before authorization.
        app.UseAuthentication();
        app.UseAuthorization();

        app.UseEndpoints(endpoints =>
        {
            endpoints.MapControllerRoute(
                name: "default",
                pattern: "{controller=Home}/{action=Index}/{id?}");
        });
    }
}
```

And now that we've moved this logic out, we can update the fixture to use the new Startup class:

```csharp
public MvcAppTestFixture()
{
    _server = new TestServer(new WebHostBuilder()
        .UseStartup<Startup>());

    Client = _server.CreateClient();
}
```

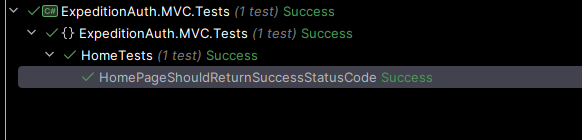

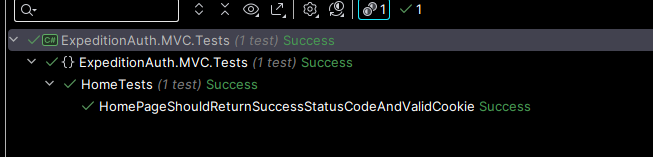

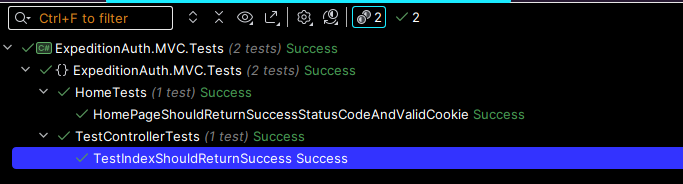

Which then gives me a wonderful green light on my test, to say that it has what's required!

So my next question is: how the hell do I use cookies with TestServer and HttpClient, so that I can test the session-based logic going on? There doesn't seem to be anything attached to _client or response that would let me access the cookies, but there must be a way!

I'll stop myself going further down this rabbit hole here (and taking you with me): this did not work. TestServer just doesn't seem to offer a straightforward way to access the cookies. We can read the Set-Cookie header that would tell a browser or similar consuming application to create a cookie, but I found no way to actually pass it back up while using this mechanism. Very sad, very frustrating, but valuable knowledge for us to take away.

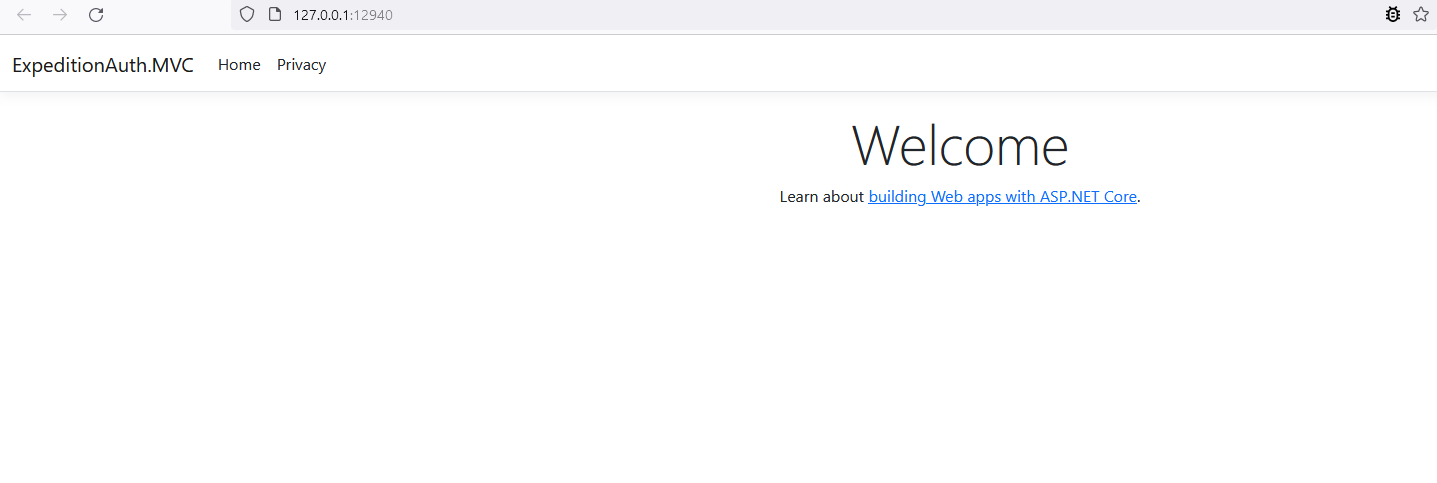

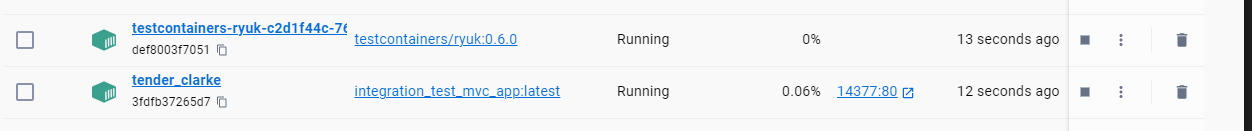
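For reference, one possible workaround is to keep a CookieContainer in the test yourself. This is a sketch, not something tested against this project. The idea is to copy each Set-Cookie header from a TestServer response into the container, then replay the stored cookies as a Cookie header on the next request. The CookieJarHelper class and its method names are hypothetical. CookieContainer.SetCookies and CookieContainer.GetCookieHeader are the real BCL calls doing the work.

```csharp
using System;
using System.Net;
using System.Net.Http;

// Hypothetical helper: TestServer's HttpClient has no CookieContainer, so the test keeps one itself.
public static class CookieJarHelper
{
    // Copy every Set-Cookie header from a response into the jar, scoped to the request's Uri.
    public static void CaptureCookies(CookieContainer jar, Uri requestUri, HttpResponseMessage response)
    {
        if (!response.Headers.TryGetValues("Set-Cookie", out var values)) return;

        foreach (var header in values)
        {
            jar.SetCookies(requestUri, header);
        }
    }

    // Attach whichever stored cookies match the request's Uri as a single Cookie header.
    public static void ApplyCookies(CookieContainer jar, HttpRequestMessage request)
    {
        var uri = request.RequestUri
                  ?? throw new ArgumentException("Request has no RequestUri.", nameof(request));

        var cookieHeader = jar.GetCookieHeader(uri);
        if (!string.IsNullOrEmpty(cookieHeader))
        {
            request.Headers.Add("Cookie", cookieHeader);
        }
    }
}
```

This is the bookkeeping a browser (or an HttpClientHandler with a CookieContainer) would normally do for us. Running the app in a real container avoids it entirely.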

Fortunately, I wasn't quite done, and decided the best way to actually handle this would be to run the MVC app in a container! So I added Docker support to my MVC app using the right-click Add Docker Support option in VS/Rider. I then ran the container in debug mode to make sure it still works (I've made quite a lot of changes to Program/Startup, so it's good to check!), and all seems well!

So the next step is to work out how to run this using Testcontainers when the class fixture starts up, so that we can use a real HttpClient and call the URLs properly. For those of you not familiar with Docker: we need to get this up and running outside of VS so that Testcontainers can interact with the container itself.

The first step is to open a terminal window in the same directory as the new Dockerfile created in the previous step (at the root of the ExpeditionAuth.MVC project) and run docker build, giving it a name for the resulting image. Normally we'd then use docker run to start it, but Testcontainers manages the docker run part itself. All we need to know is that we trigger the docker build and pass the image name into Testcontainers. Testcontainers then runs our container, gives us a safe port and handles all the relevant disposal of the container when we're done.

Now, obviously, we don't want to run this manually every time we want to run our tests, so we'll run the docker build from within the constructor of our fixture. This also means we have control over the image name and can pass it through as needed, like so:

```csharp
using System.Diagnostics;
using DotNet.Testcontainers.Builders;

namespace ExpeditionAuth.MVC.Tests;

public class MvcAppTestFixture : IDisposable
{
    private const string ImageName = "integration_test_mvc_app:latest";

    // Build context: the solution root, relative to the test assembly's output folder.
    private const string DockerImagePath = @"..\..\..\..";

    public HttpClient Client { get; }

    public MvcAppTestFixture()
    {
        BuildDockerImage();

        var container = new ContainerBuilder()
            .WithImage(ImageName)
            .WithPortBinding(80, true) // map container port 80 to a random free host port
            .WithWaitStrategy(Wait.ForUnixContainer().UntilHttpRequestIsSucceeded(r => r.ForPort(80)))
            .Build();

        container.StartAsync().Wait();

        var requestUri = new UriBuilder(Uri.UriSchemeHttp, container.Hostname, container.GetMappedPublicPort(80), "/").Uri;

        // Sanity check only: make sure the app inside the container answers at all.
        Client = new HttpClient();
        var response = Client.GetAsync(requestUri).GetAwaiter().GetResult();
    }

    private static void BuildDockerImage()
    {
        var psi = new ProcessStartInfo
        {
            FileName = "docker",
            Arguments = $"build -t {ImageName} -f ./ExpeditionAuth.MVC/Dockerfile .",
            WorkingDirectory = DockerImagePath,
            RedirectStandardOutput = true,
            RedirectStandardError = true,
            UseShellExecute = false,
            CreateNoWindow = true
        };

        using var process = Process.Start(psi)
            ?? throw new InvalidOperationException("Could not start the docker process.");

        // Drain both streams while the build runs; waiting for exit first can deadlock
        // once docker fills the redirected output buffers.
        var stdErr = process.StandardError.ReadToEndAsync();
        process.StandardOutput.ReadToEnd();
        process.WaitForExit();

        if (process.ExitCode == 0) return;

        throw new Exception($"Docker build failed with error: {stdErr.Result}");
    }

    public void Dispose()
    {
        Client.Dispose();
    }
}
```

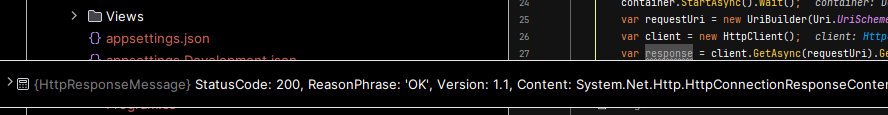

While this is a bit of a mess at the moment, it does let me sanity check that the implementation will work. After that, I can get rid of the client call within the constructor and store the Uri and container references instead. The calling class can then access the Uri and provide its own HttpClient, which keeps tests standalone and unaffected by leftovers from other calls to the app. So I'll now debug the constructor and see if it does indeed do what I want.

One annoyingly frustrating issue here: you must run docker build from the directory that contains the .sln file, NOT the one containing the Dockerfile, and pass ./ExpeditionAuth.MVC/Dockerfile as the -f parameter. This is because the Dockerfile generated by VS/Rider copies project paths relative to the solution folder. This has been reflected above using the WorkingDirectory property of ProcessStartInfo.

And wonderfully, it looks like it's working exactly how I wanted, and I can exit this particularly painful TestServer rabbit hole with only a few hours wasted! Now that I know this approach works, I can modify the code a bit, clean it up and get it ready for use in actual testing scenarios. A quick double check that cookies work as intended will be my very first test case.

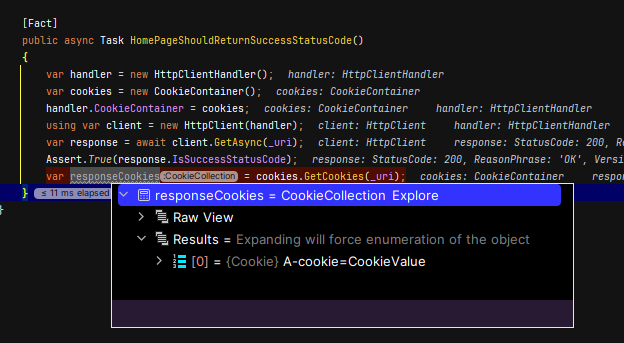

So, beautifully enough, when I plug a CookieContainer and HttpClientHandler into the HttpClient, I get a lovely view in debug mode of the cookie I've set within the Index method:

```csharp
public IActionResult Index()
{
    var options = new CookieOptions
    {
        Expires = DateTime.Now.AddDays(1) // The cookie will expire in 1 day
    };

    // Test-only cookie so the integration tests can check that cookies round-trip.
    Response.Cookies.Append("A-cookie", "CookieValue", options);

    return View();
}
```

Which just provides me with the evidence that I require to move forward with this approach, as such I'll finish cleaning up the Test class now as well.

```csharp
using System.Net;

namespace ExpeditionAuth.MVC.Tests;

public class HomeTests : IClassFixture<MvcAppTestFixture>
{
    private readonly Uri _uri;

    public HomeTests(MvcAppTestFixture fixture)
    {
        _uri = fixture.MvcAppUri;
    }

    [Fact]
    public async Task HomePageShouldReturnSuccessStatusCodeAndValidCookie()
    {
        // Each test gets its own handler and cookie jar, so no state leaks between tests.
        var handler = new HttpClientHandler();
        var cookies = new CookieContainer();
        handler.CookieContainer = cookies;

        using var client = new HttpClient(handler);

        var response = await client.GetAsync(_uri);

        Assert.True(response.IsSuccessStatusCode);

        var responseCookies = cookies.GetCookies(_uri);
        var expectedCookie = responseCookies.FirstOrDefault(c => c.Name == "A-cookie");

        Assert.NotNull(expectedCookie);
        Assert.Equal("CookieValue", expectedCookie?.Value);
    }
}
```

```csharp
using System.Diagnostics;
using DotNet.Testcontainers.Builders;
using IContainer = DotNet.Testcontainers.Containers.IContainer;

namespace ExpeditionAuth.MVC.Tests;

public class MvcAppTestFixture : IDisposable
{
    private const string ImageName = "integration_test_mvc_app:latest";
    private const string DockerImagePath = @"..\..\..\.."; // solution root, relative to the test output folder

    public Uri MvcAppUri { get; }

    private readonly IContainer _mvcContainer;

    public MvcAppTestFixture()
    {
        BuildDockerImage();

        _mvcContainer = new ContainerBuilder()
            .WithImage(ImageName)
            .WithPortBinding(80, true)
            .WithWaitStrategy(Wait.ForUnixContainer().UntilHttpRequestIsSucceeded(r => r.ForPort(80)))
            .Build();

        _mvcContainer.StartAsync().Wait();

        // Only the base address is exposed; each test creates its own HttpClient.
        MvcAppUri = new UriBuilder(Uri.UriSchemeHttp, _mvcContainer.Hostname, _mvcContainer.GetMappedPublicPort(80)).Uri;
    }

    private static void BuildDockerImage()
    {
        var psi = new ProcessStartInfo
        {
            FileName = "docker",
            Arguments = $"build -t {ImageName} -f ./ExpeditionAuth.MVC/Dockerfile .",
            WorkingDirectory = DockerImagePath,
            RedirectStandardOutput = true,
            RedirectStandardError = true,
            UseShellExecute = false,
            CreateNoWindow = true
        };

        using var process = Process.Start(psi)
            ?? throw new InvalidOperationException("Could not start the docker process.");

        // Drain both streams while the build runs to avoid a redirected-output deadlock.
        var stdErr = process.StandardError.ReadToEndAsync();
        process.StandardOutput.ReadToEnd();
        process.WaitForExit();

        if (process.ExitCode == 0) return;

        throw new Exception($"Docker build failed with error: {stdErr.Result}");
    }

    public void Dispose()
    {
        _mvcContainer.StopAsync().Wait();
        _mvcContainer.DisposeAsync().GetAwaiter().GetResult();
    }
}
```

And that confirms that my tests can now perform as needed, which is wonderful! Unfortunately, there is one last thing to do here: find a way to provide the connection string for this Docker-based server. That way we can use a transient SQL Server for the MVC app, in the same vein as the web API test infrastructure. As we're in Docker, it's quite easy for us to pass in environment variables. So, for the sake of ease, we can add our connection string to the docker run command, then read it from the environment and pass it through to Expedition.Auth as part of the DI setup process.

Thankfully, Testcontainers makes this trivial, as it exposes a .WithEnvironment() method that lets me pass the connection string through with minimal fuss. All that's left is to add the code to spin up the DB container and pass the string through.

```csharp
using System.Diagnostics;
using DotNet.Testcontainers.Builders;
using Testcontainers.MsSql;
using IContainer = DotNet.Testcontainers.Containers.IContainer;

namespace ExpeditionAuth.MVC.Tests;

public class MvcAppTestFixture : IDisposable
{
    private const string ImageName = "integration_test_mvc_app:latest";
    private const string DockerImagePath = @"..\..\..\.."; // solution root, relative to the test output folder

    public Uri MvcAppUri { get; }

    private readonly IContainer _mvcContainer;
    private readonly MsSqlContainer _db;

    public MvcAppTestFixture()
    {
        // Transient SQL Server for this test run.
        _db = new MsSqlBuilder().Build();
        _db.StartAsync().Wait();

        BuildDockerImage();

        _mvcContainer = new ContainerBuilder()
            .WithImage(ImageName)
            .WithEnvironment("connectionString", _db.GetConnectionString())
            .WithPortBinding(80, true)
            .WithWaitStrategy(Wait.ForUnixContainer().UntilHttpRequestIsSucceeded(r => r.ForPort(80)))
            .Build();

        _mvcContainer.StartAsync().Wait();

        MvcAppUri = new UriBuilder(Uri.UriSchemeHttp, _mvcContainer.Hostname, _mvcContainer.GetMappedPublicPort(80)).Uri;
    }

    private static void BuildDockerImage()
    {
        var psi = new ProcessStartInfo
        {
            FileName = "docker",
            Arguments = $"build -t {ImageName} -f ./ExpeditionAuth.MVC/Dockerfile .",
            WorkingDirectory = DockerImagePath,
            RedirectStandardOutput = true,
            RedirectStandardError = true,
            UseShellExecute = false,
            CreateNoWindow = true
        };

        using var process = Process.Start(psi)
            ?? throw new InvalidOperationException("Could not start the docker process.");

        // Drain both streams while the build runs to avoid a redirected-output deadlock.
        var stdErr = process.StandardError.ReadToEndAsync();
        process.StandardOutput.ReadToEnd();
        process.WaitForExit();

        if (process.ExitCode == 0) return;

        throw new Exception($"Docker build failed with error: {stdErr.Result}");
    }

    public void Dispose()
    {
        _mvcContainer.StopAsync().Wait();
        _mvcContainer.DisposeAsync().GetAwaiter().GetResult();

        _db.StopAsync().Wait();
        _db.DisposeAsync().GetAwaiter().GetResult();
    }
}
```

Now, this leaves me with one last thing to do: build out the stored procedures and tables on this new transient database, the same way I did when I built out the ApiWebApplicationFactory. I could do it in much the same way, but I think it's time to address this as a separate concern. I'll move the code that sets up the database into a helper that both test projects can share and call as needed, so that any change I make to it is automatically propagated through both sets of tests.

So I'll create a new class library called ExpeditionAuth.Test.Helpers and add a class called DatabaseHelper which will expose a BuildTestDatabase extension method; this method will be responsible for starting the container and running all the script files against it.

```csharp
using System.Data.SqlClient;
using System.Text.RegularExpressions;
using Testcontainers.MsSql;

namespace ExpeditionAuth.Test.Helpers;

public static class DatabaseHelper
{
    // A line containing only the GO batch separator (case-insensitive, optional trailing semicolon).
    private static readonly Regex BatchSeparator =
        new(@"^\s*GO\s*;?\s*$", RegexOptions.Multiline | RegexOptions.IgnoreCase);

    public static MsSqlContainer BuildTestDatabase(this MsSqlContainer db)
    {
        db.StartAsync().Wait();
        InitializeDatabase(db.GetConnectionString()).GetAwaiter().GetResult();
        return db;
    }

    private static async Task InitializeDatabase(string connectionString)
    {
        await using var connection = new SqlConnection(connectionString);
        await connection.OpenAsync();

        var baseDir = @"..\..\..\..\Expedition.Auth\Schema";

        var files = new List<string>
        {
            $@"{baseDir}\Tables\UserAccounts.sql",
            $@"{baseDir}\StoredProcedures\RegisterUser.sql",
            $@"{baseDir}\StoredProcedures\GetUserByUsername.sql"
        };

        foreach (var file in files)
        {
            var fullScript = await File.ReadAllTextAsync(file);

            // "GO" is a client-side batch separator, not T-SQL, so SqlCommand can't execute it.
            // Split only on lines consisting of GO; Split("GO") would also cut words like "CATEGORY".
            var scripts = BatchSeparator.Split(fullScript);

            foreach (var script in scripts)
            {
                if (string.IsNullOrWhiteSpace(script)) continue; // skip empty batches

                await using var command = new SqlCommand(script, connection);
                await command.ExecuteNonQueryAsync();
            }
        }
    }
}
```

Which means that calling it and provisioning my full database becomes as simple as

```csharp
using System.Diagnostics;
using DotNet.Testcontainers.Builders;
using ExpeditionAuth.Test.Helpers;
using Testcontainers.MsSql;
using IContainer = DotNet.Testcontainers.Containers.IContainer;

namespace ExpeditionAuth.MVC.Tests;

public class MvcAppTestFixture : IDisposable
{
    private const string ImageName = "integration_test_mvc_app:latest";
    private const string DockerImagePath = @"..\..\..\.."; // solution root, relative to the test output folder

    public Uri MvcAppUri { get; }

    private readonly IContainer _mvcContainer;
    private readonly MsSqlContainer _db;

    public MvcAppTestFixture()
    {
        // Starts SQL Server and runs the schema scripts via the shared helper.
        _db = new MsSqlBuilder().Build().BuildTestDatabase();

        BuildDockerImage();

        _mvcContainer = new ContainerBuilder()
            .WithImage(ImageName)
            .WithEnvironment("connectionString", _db.GetConnectionString())
            .WithPortBinding(80, true)
            .WithWaitStrategy(Wait.ForUnixContainer().UntilHttpRequestIsSucceeded(r => r.ForPort(80)))
            .Build();

        _mvcContainer.StartAsync().Wait();

        MvcAppUri = new UriBuilder(Uri.UriSchemeHttp, _mvcContainer.Hostname, _mvcContainer.GetMappedPublicPort(80)).Uri;
    }

    private static void BuildDockerImage()
    {
        var psi = new ProcessStartInfo
        {
            FileName = "docker",
            Arguments = $"build -t {ImageName} -f ./ExpeditionAuth.MVC/Dockerfile .",
            WorkingDirectory = DockerImagePath,
            RedirectStandardOutput = true,
            RedirectStandardError = true,
            UseShellExecute = false,
            CreateNoWindow = true
        };

        using var process = Process.Start(psi)
            ?? throw new InvalidOperationException("Could not start the docker process.");

        // Drain both streams while the build runs to avoid a redirected-output deadlock.
        var stdErr = process.StandardError.ReadToEndAsync();
        process.StandardOutput.ReadToEnd();
        process.WaitForExit();

        if (process.ExitCode == 0) return;

        throw new Exception($"Docker build failed with error: {stdErr.Result}");
    }

    public void Dispose()
    {
        _mvcContainer.StopAsync().Wait();
        _mvcContainer.DisposeAsync().GetAwaiter().GetResult();

        _db.StopAsync().Wait();
        _db.DisposeAsync().GetAwaiter().GetResult();
    }
}
```

And when we get back to the API, we can easily add it there as well! The final part we need to address is passing the connection string through. Luckily, that's fairly simple and just requires modifying the MVC startup like so:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllersWithViews();

    // Supplied by the test fixture via the container's environment.
    var connString = Environment.GetEnvironmentVariable("ExpeditionAuthConnectionString");

    if (string.IsNullOrEmpty(connString))
        throw new InvalidOperationException("Environment.ExpeditionAuthConnectionString must be set");

    services.AddExpeditionAuth(connString);
}
```

And updating the WithEnvironment call in the test fixture to pass the correct name through.

```csharp
public MvcAppTestFixture()
{
    _db = new MsSqlBuilder().Build().BuildTestDatabase();

    BuildDockerImage();

    _mvcContainer = new ContainerBuilder()
        .WithImage(ImageName)
        .WithEnvironment("ExpeditionAuthConnectionString", _db.GetConnectionString())
        .WithPortBinding(80, true)
        .WithWaitStrategy(Wait.ForUnixContainer().UntilHttpRequestIsSucceeded(r => r.ForPort(80)))
        .Build();

    _mvcContainer.StartAsync().Wait();

    MvcAppUri = new UriBuilder(Uri.UriSchemeHttp, _mvcContainer.Hostname, _mvcContainer.GetMappedPublicPort(80)).Uri;
}
```

To test this through really quickly, we'll create an MVC controller that talks to the DB and returns an appropriate status for us:

```csharp
using Expedition.Auth.Services;
using Microsoft.AspNetCore.Mvc;

namespace ExpeditionAuth.MVC.Controllers;

public class TestController : Controller
{
    private readonly ILogger<TestController> _logger;
    private readonly IAuthenticationService _authenticationService;

    public TestController(ILogger<TestController> logger, IAuthenticationService authenticationService)
    {
        _logger = logger;
        _authenticationService = authenticationService;
    }

    public async Task<IActionResult> Index()
    {
        // Goes through Expedition.Auth to the database, so a bad connection string fails here.
        await _authenticationService.RegisterUserAsync("test", "test");

        return View();
    }
}
```

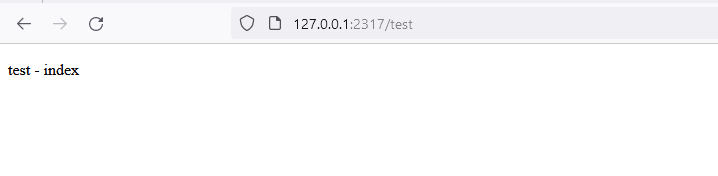

And then, within my normal MVC IIS Express debugging session, I can see that the result is returned as intended from the RegisterUserAsync method.

I'd also like to jump in here and say how nostalgic it is to set up a vanilla .NET MVC project again; it's been a few years, to say the least!

However, I don't have a view for this yet, so we can't actually get a 200 response from the app. As such, we'll add a simple new view matching the controller location, so that I can create a test that expects the 200 response.

```cshtml
@* Views/Test/Index.cshtml *@
@{
    Layout = null;
}

<!DOCTYPE html>
<html>
<head>
    <title>title</title>
</head>
<body>
    <div>
        <p>test - index</p>
    </div>
</body>
</html>
```

namespace ExpeditionAuth.MVC.Tests;

public class TestControllerTests : IClassFixture<MvcAppTestFixture>

{

private readonly Uri _uri;

public TestControllerTests(MvcAppTestFixture fixture)

{

_uri = fixture.MvcAppUri;

}

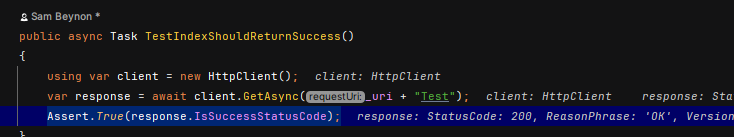

[Fact]

public async Task TestIndexShouldReturnSuccess()

{

using var client = new HttpClient();

var response = await client.GetAsync(_uri + "/Test/Index");

Assert.True(response.IsSuccessStatusCode);

}

}

And now the remaining issue lies in the fact that I've been a bit forgetful. The connection string for the database points at the local loopback address, which isn't going to work for the app running inside a Docker container. Inside the container, the loopback address refers to the container itself, not to the machine running the DB. So we need to modify the connection string we pass through to the MVC app so that it routes via the host OS (my PC) and can connect.

```csharp
public MvcAppTestFixture()
{
    _db = new MsSqlBuilder().Build().BuildTestDatabase();

    // Reach the DB through the Docker host rather than the MVC container's own loopback.
    var connectionString = _db.GetConnectionString();
    connectionString = connectionString.Replace("127.0.0.1", "host.docker.internal");

    BuildDockerImage();

    _mvcContainer = new ContainerBuilder()
        .WithImage(ImageName)
        .WithEnvironment("ExpeditionAuthConnectionString", connectionString)
        .WithPortBinding(8080, true)
        .WithWaitStrategy(Wait.ForUnixContainer().UntilHttpRequestIsSucceeded(r => r.ForPort(8080)))
        .Build();

    _mvcContainer.StartAsync().Wait();

    MvcAppUri = new UriBuilder(Uri.UriSchemeHttp, _mvcContainer.Hostname, _mvcContainer.GetMappedPublicPort(8080)).Uri;
}
```
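The string.Replace above only works if the container reports its host as exactly 127.0.0.1. If it comes back as localhost instead, the replace silently does nothing. A slightly more defensive sketch parses the string with DbConnectionStringBuilder (from System.Data.Common) and swaps only the host part of the Server value, keeping the port. It assumes the Server=host,port;... shape used for SQL Server, and ConnectionStringHost.WithHost is a hypothetical helper name. Also worth knowing: host.docker.internal resolves out of the box on Docker Desktop, but on a plain Linux Docker engine it typically needs an extra host mapping.

```csharp
using System;
using System.Data.Common;

// Hypothetical helper: point a connection string's server at a different host, keeping the port.
public static class ConnectionStringHost
{
    public static string WithHost(string connectionString, string newHost)
    {
        // The generic builder matches keys case-insensitively but knows no SQL Server synonyms,
        // so check the two common spellings explicitly.
        var builder = new DbConnectionStringBuilder { ConnectionString = connectionString };

        var key = builder.ContainsKey("Server") ? "Server"
                : builder.ContainsKey("Data Source") ? "Data Source"
                : throw new ArgumentException("No Server/Data Source key found.", nameof(connectionString));

        var server = builder[key].ToString() ?? string.Empty;

        // SQL Server puts the port after a comma, e.g. "127.0.0.1,49153".
        var commaIndex = server.IndexOf(',');
        var portSuffix = commaIndex >= 0 ? server[commaIndex..] : string.Empty;

        builder[key] = newHost + portSuffix;
        return builder.ConnectionString;
    }
}
```

In the fixture, this would stand in for the Replace line: connectionString = ConnectionStringHost.WithHost(_db.GetConnectionString(), "host.docker.internal").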

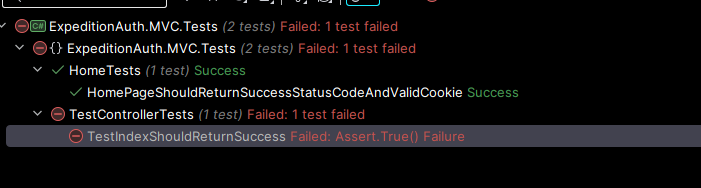

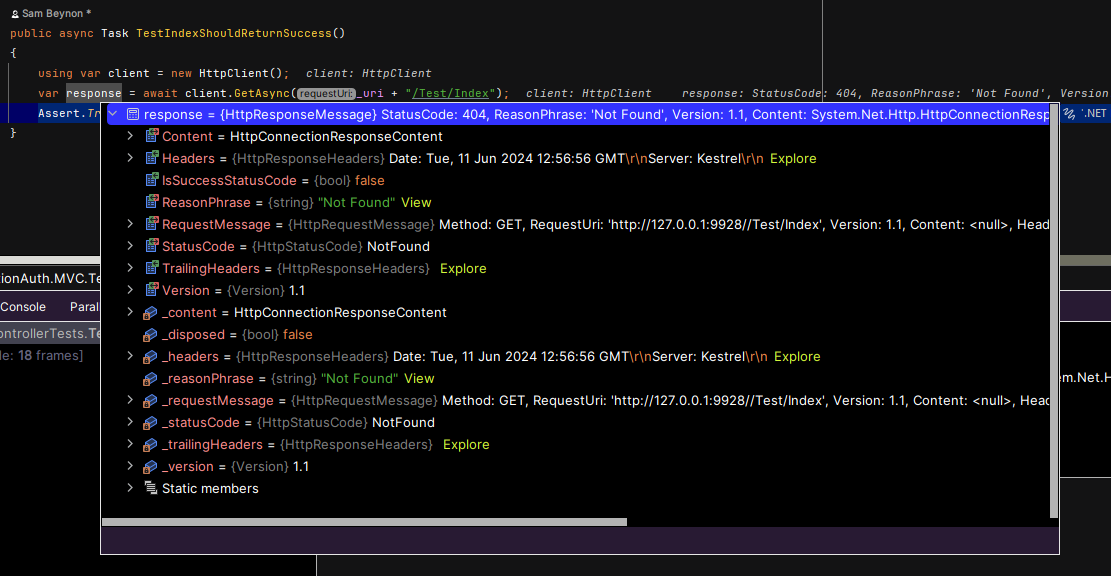

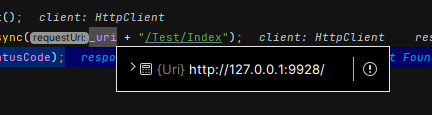

You'll also notice that I've changed the ports from 80 to 8080. 8080 is the new default HTTP port in the official .NET 8 ASP.NET Core container images, and I've now moved back to .NET 8 after solving the MapDataReader issue from Part 1. That turned out to be an issue with my local setup rather than the package; for some reason the compiler just refused to be useful! At any rate, we can now see that the Home tests run fine, as they don't rely on anything special. However, the test for the new Test controller doesn't pass.

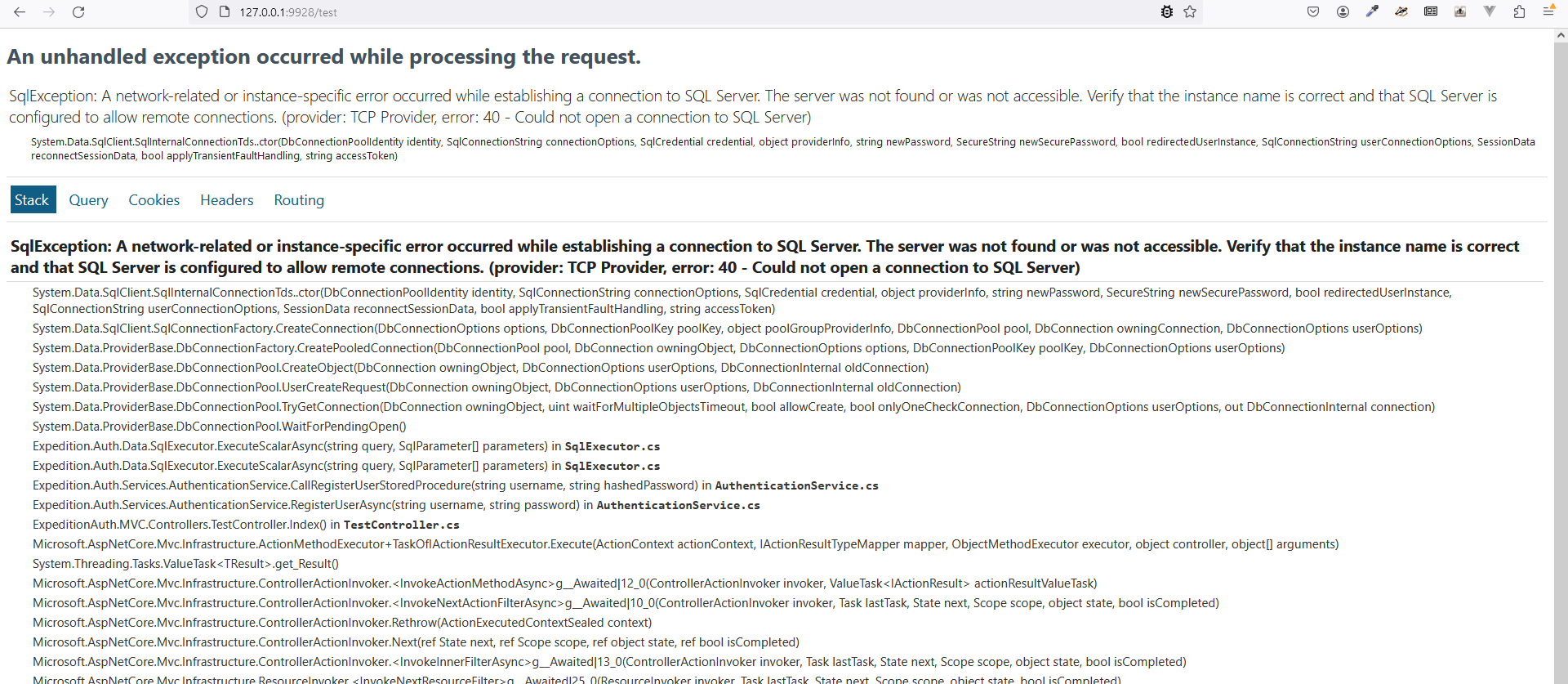

And this highlights the problem with this specific type of testing: I can't actually debug the app while running the test, because it runs in a Docker container that isn't attached to my debugger. What I can do is reproduce the scenario manually to identify the issue. We know it only happens on that page, so I'll run the MVC app in debug mode and it'll likely tell me what the problem is.

It doesn't, however, which is frustrating. Given the simplicity here, I suspect there's a problem with the database connection; the advantage of having very few moving parts is that it's easy to make a guess like this, and it's probably going to be correct. So I'll pass the relevant environment variable through to the Docker container to enable development errors, so we can see the exact exception being thrown.

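```csharp
// Added to the ContainerBuilder chain in the fixture: show developer error pages inside the container.
.WithEnvironment("ASPNETCORE_ENVIRONMENT", "Development")
```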

Now, if I put a breakpoint in my test method, I can read the response content. If that doesn't give me enough information, I can navigate directly to the page (remember, it's a container, so it's quite literally a full MVC app running as normal), or check the container's stdout to see whether the error has been written to the console.

And sure enough, as anticipated, the container cannot access the database, which is... quite annoying! It was entirely predictable, though: yesterday, while making sure something ran before I stopped writing this post for the day, I had in fact commented out the line we need...

Whoopsy! So I'll comment out the local AddExpeditionAuth call in case I need it later, uncomment the lines we care about, and move on. (I hope... We'll definitely add a better way to switch between these two connection strings automatically later on!)

Unfortunately, I've also been a bit of a dunce and put /Test/Index into the test instead of just Test, so the test is still failing with a 404. The base Uri already ends with a trailing '/', so the concatenation produces a double slash, and Index is the default action anyway. That's a nice, quick and simple change!
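One way to avoid this class of mistake is to build request URLs with the Uri(Uri, string) constructor instead of string concatenation. That constructor resolves relative paths the way a browser would. A small sketch; AppUrls.For is a hypothetical helper name, and the port in the usage below is just an example:

```csharp
using System;

// Hypothetical helper for building page URLs from the fixture's base Uri.
public static class AppUrls
{
    public static Uri For(Uri baseUri, string relativePath)
    {
        // Resolves like a browser: "Test" is appended to the base path, and "/Test/Index" is
        // treated as root-relative, so neither form produces a doubled slash.
        return new Uri(baseUri, relativePath);
    }
}
```

In the test, that would read await client.GetAsync(AppUrls.For(_uri, "Test")).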

AND THAT is my MVC back-end E2E testing framework in place, which will enable me to test the authentication flow properly.

You'll notice that my focus has moved to providing just an MVC layer for the moment. This is mostly because I want to use this project as a NuGet package in another series of posts I'm creating, and I can focus on adding support for other application types later.

And the beauty is that, now that we have the basics in place, we'll be able to easily reproduce this for a web API later on. For a desktop application, I suspect we'll be able to use the relevant mechanisms directly within a "unit test" context, as it's local-machine based.

But now, the moment I'm sure we've all been waiting for: some implementation! The first thing we need to do is hook into the authentication layer that exists within ASP.NET Core, tell it we want to use cookies, and provide some sane defaults for the cookie settings. As such, in the AddExpeditionAuth method we could add something along the lines of this:

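```csharp
// Uses types from Microsoft.AspNetCore.Authentication.Cookies.
services.AddAuthentication(CookieAuthenticationDefaults.AuthenticationScheme)
    .AddCookie(options =>
    {
        options.Cookie.HttpOnly = true;                          // not readable from JavaScript
        options.Cookie.SecurePolicy = CookieSecurePolicy.Always; // only sent over HTTPS
        options.Cookie.SameSite = SameSiteMode.Strict;           // not sent on cross-site requests
        options.Cookie.Name = "your-cookie-name";                // placeholder; cookie names can't contain spaces
        options.TicketDataFormat = new CustomSecureDataFormat(); // placeholder for a custom ISecureDataFormat<AuthenticationTicket>
        options.Events = new CookieAuthenticationEvents
        {
            // Hook for re-validating the principal on each request; a no-op for now.
            OnValidatePrincipal = context => Task.CompletedTask
        };
    });
```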

However, that would drag along a lot of ASP.NET Core dependencies that may not be needed by every app that wants to use the library. So we're going to move this part of the functionality into a separate class library. That library can provide the additional types and "proxy" calls through to the core library for anything that should be shared between all implementations (such as username and password validation). As such, I'll create a new class library called Expedition.Auth.Cookies, which can be released as an individual NuGet package when we get to that stage.

The first thing we're going to look at is actually creating the cookie, making sure it has all the information it requires while staying nice and secure. We'll provide a new service as part of the Cookies package that overrides ValidateUserAsync. It proxies down to the underlying service in the core package and, on success, builds the cookie and adds it to the HttpContext's response.

```csharp
using System.Text.Json;
using Expedition.Auth.Data;
using Expedition.Auth.Results;
using Expedition.Auth.Services;
using Microsoft.AspNetCore.DataProtection;
using Microsoft.AspNetCore.Http;

namespace Expedition.Auth.Cookies.Services;

public class CookieAuthenticationService : AuthenticationService
{
    private readonly IDataProtector _dataProtector;
    private readonly IHttpContextAccessor _httpContextAccessor;

    public CookieAuthenticationService(ISqlExecutor sqlExecutor, IDataProtectionProvider dataProtectionProvider, IHttpContextAccessor httpContextAccessor)
        : base(sqlExecutor)
    {
        // The purpose string isolates these payloads from other protectors in the app.
        _dataProtector = dataProtectionProvider.CreateProtector("expedition-authentication");
        _httpContextAccessor = httpContextAccessor;
    }

    public override async Task<ValidateUserResult> ValidateUserAsync(string username, string password)
    {
        // Core library does the username/password check.
        var userValidatedResponse = await base.ValidateUserAsync(username, password);

        if (userValidatedResponse.ResultCode != ValidateUserResultCode.Success) return userValidatedResponse;

        // Serialize, then encrypt and sign the user details before they leave the server.
        var serializedUserDetails = JsonSerializer.Serialize(userValidatedResponse.User);
        var encryptedUserDetails = _dataProtector.Protect(serializedUserDetails);

        var cookieOptions = new CookieOptions
        {
            Path = "/",
            HttpOnly = true,
            Secure = true,
            IsEssential = true,
            Expires = DateTimeOffset.UtcNow.AddMinutes(30) //ToDo: Ensure that expiry can be set as a configuration option.
        };

        _httpContextAccessor.HttpContext?.Response.Cookies.Append("expedition-auth", encryptedUserDetails, cookieOptions);

        return userValidatedResponse;
    }
}
```
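One thing worth double-checking here is that JsonSerializer.Serialize(userValidatedResponse.User) writes out every public property of the user object. The user type isn't shown in this post, so this is an assumption, but if it carries the password hash, that hash ends up (encrypted, but still) in every cookie. A sketch of projecting to a minimal payload first; UserAccount and CookiePayload are hypothetical stand-ins, not the library's real types:

```csharp
using System;
using System.Text.Json;

// Hypothetical stand-in for the core library's user type (its real shape isn't shown here).
public sealed record UserAccount(Guid Id, string Username, string PasswordHash);

// Hypothetical cookie payload: only what later requests need to identify the user.
public sealed record CookiePayload(Guid UserId, string Username);

public static class CookiePayloadSerializer
{
    // Project first, so secrets such as the hash never reach the serializer.
    public static string Serialize(UserAccount user) =>
        JsonSerializer.Serialize(new CookiePayload(user.Id, user.Username));

    // System.Text.Json binds the record's primary constructor when reading it back.
    public static CookiePayload? Deserialize(string json) =>
        JsonSerializer.Deserialize<CookiePayload>(json);
}
```

In ValidateUserAsync, the projected JSON would be what gets passed to _dataProtector.Protect. Reading the cookie back would be the reverse: Unprotect, then Deserialize.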

Now, an important aspect here is that we need to override the IAuthenticationService registration from the core library with our new CookieAuthenticationService, which we can do with the following:

```csharp
using Expedition.Auth.Cookies.Services;
using Expedition.Auth.DependencyInjection;
using Expedition.Auth.Services;
using Microsoft.Extensions.DependencyInjection;

namespace Expedition.Auth.Cookies.DependencyInjection;

public static class ServiceCollectionExtensions
{
    public static IServiceCollection AddExpeditionCookieAuth(this IServiceCollection services, string connectionString)
    {
        services.AddExpeditionAuth(connectionString);

        // Swap the core registration for the cookie-aware one. This is equivalent to
        // services.Replace(ServiceDescriptor.Scoped<IAuthenticationService, CookieAuthenticationService>())
        // from Microsoft.Extensions.DependencyInjection.Extensions.
        var serviceDescriptor = services.FirstOrDefault(descriptor => descriptor.ServiceType == typeof(IAuthenticationService));

        if (serviceDescriptor != null)
        {
            services.Remove(serviceDescriptor);
        }

        services.AddScoped<IAuthenticationService, CookieAuthenticationService>();

        return services;
    }
}
```

This removes the existing registration and adds the new implementation in its place, ensuring that consumers who have opted into cookies get the correct implementation of the service.

Which is lovely, and means that all we need to do now is update Startup.cs to use the new DI extension and register IHttpContextAccessor at the root level. We should make that registration an installation step for consumers rather than doing it inside the library, so that we don't have to bundle all of the underlying packages with ours, as the relevant ones can get pretty large!

public void ConfigureServices(IServiceCollection services)

{

services.AddControllersWithViews();

services.AddHttpContextAccessor();

var connString = Environment.GetEnvironmentVariable("ExpeditionAuthConnectionString");

// services.AddExpeditionAuth("Server=(local);Database=Expedition;Trusted_Connection=True;");

if (string.IsNullOrEmpty(connString))

throw new InvalidOperationException("Environment.ExpeditionAuthConnectionString must be set");

services.AddExpeditionCookieAuth(connString);

}

And now that this is in place, we can create a test to validate that the specific cookie has been added to the response, as well as a test that determines whether or not we are authorized. The latter fails at the time of writing, which is the point: it tells us when the next part of the implementation, supporting the [Authorize] attribute on our controller methods, is done.

It was at this point that I hit a strange issue when re-running my tests, which I was able to shed some light on by running the docker build command directly from my terminal. I was receiving errors stating that a number of Microsoft.AspNetCore.* packages could not be included in the packed application. This is because those packages have been deprecated: since ASP.NET Core 3.0 they ship as part of the shared framework and are referenced with a FrameworkReference instead. Adding the following to my Expedition.Auth.Cookies.csproj fixed the problem:

<ItemGroup>

<FrameworkReference Include="Microsoft.AspNetCore.App" />

</ItemGroup>

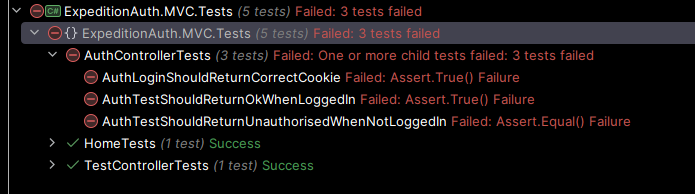

As such, I've now created 3 new tests covering the functionality I care about, and they're fairly simple. One tests the E2E flow of a user registering and then logging in (and receiving their cookie), one calls an endpoint decorated with the [Authorize] attribute while not logged in and expects a 401, and a third ensures that calling the same endpoint while logged in returns the expected 200 response.

using System.Net;

using System.Text;

using System.Text.Json;

namespace ExpeditionAuth.MVC.Tests;

public class AuthControllerTests : IClassFixture<MvcAppTestFixture>

{

private readonly Uri _uri;

public AuthControllerTests(MvcAppTestFixture fixture)

{

_uri = fixture.MvcAppUri;

}

[Fact]

public async Task AuthLoginShouldReturnCorrectCookie()

{

var userModel = new {Username = "test", Password = "test"};

var handler = new HttpClientHandler();

var cookies = new CookieContainer();

handler.CookieContainer = cookies;

using var client = new HttpClient(handler);

var json = JsonSerializer.Serialize(userModel);

var content = new StringContent(json, Encoding.UTF8, "application/json");

var response = await client.PostAsync(_uri + "auth/register", content);

Assert.True(response.IsSuccessStatusCode);

response = await client.PostAsync(_uri + "auth/login", content);

Assert.True(response.IsSuccessStatusCode);

var responseCookies = cookies.GetCookies(_uri);

var expectedCookie = responseCookies.FirstOrDefault(c => c.Name == "expedition-auth");

Assert.NotNull(expectedCookie);

}

[Fact]

public async Task AuthTestShouldReturnUnauthorisedWhenNotLoggedIn()

{

var handler = new HttpClientHandler();

var cookies = new CookieContainer();

handler.CookieContainer = cookies;

using var client = new HttpClient(handler);

var response = await client.GetAsync(_uri + "auth/test");

Assert.False(response.IsSuccessStatusCode);

Assert.Equal(HttpStatusCode.Unauthorized, response.StatusCode);

}

[Fact]

public async Task AuthTestShouldReturnOkWhenLoggedIn()

{

var userModel = new {Username = "test", Password = "test"};

var handler = new HttpClientHandler();

var cookies = new CookieContainer();

handler.CookieContainer = cookies;

using var client = new HttpClient(handler);

var json = JsonSerializer.Serialize(userModel);

var content = new StringContent(json, Encoding.UTF8, "application/json");

var response = await client.PostAsync(_uri + "auth/register", content);

Assert.True(response.IsSuccessStatusCode);

response = await client.PostAsync(_uri + "auth/login", content);

Assert.True(response.IsSuccessStatusCode);

var responseCookies = cookies.GetCookies(_uri);

var expectedCookie = responseCookies.FirstOrDefault(c => c.Name == "expedition-auth");

Assert.NotNull(expectedCookie);

response = await client.GetAsync(_uri + "auth/test");

Assert.True(response.IsSuccessStatusCode);

}

}
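CookieAuthenticationService marks the cookie as Secure, so these tests quietly depend on _uri being an https address. CookieContainer only hands Secure cookies back for https (or wss) URIs, so with an http _uri the NotNull assertion on expedition-auth fails even when the server did its job. Here is a standalone check of that behaviour. The cookie value, host and port are made up for illustration.

```csharp
using System;
using System.Net;

var container = new CookieContainer();

// Store a cookie shaped like the one CookieAuthenticationService writes (the value is a placeholder).
container.Add(new Uri("https://localhost:8080/"), new Cookie("expedition-auth", "placeholder", "/")
{
    Secure = true,
    HttpOnly = true
});

// Look the cookie up for the same host, port and path; only the scheme differs.
var overHttps = container.GetCookies(new Uri("https://localhost:8080/auth/test"));
var overHttp = container.GetCookies(new Uri("http://localhost:8080/auth/test"));

// The https lookup returns the cookie; the http lookup leaves the Secure cookie out.
Console.WriteLine($"https: {overHttps.Count} cookie(s), http: {overHttp.Count} cookie(s)");
```

If the test rig ever has to run over plain http, the Secure flag would need to become configurable rather than being dropped from the service.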

So now we're following proper "Test Driven Development" (or TDD) principles by building these tests before we build out further functionality. The theory is that the tests guide us towards building the right functionality, instead of us writing tests after the fact to match whatever we happened to write. They also validate the "acceptance criteria" (a common term in commercial development), much like a test plan stating "A user should be able to log in" or "When a user is logged in, they should be able to access restricted content"; we've just expressed these in code instead.

Ok, so now that we have our tests in place, and they're failing as we expect because the code does not exist yet, we can add our simple implementations, 2 of which are basically the same as we implemented within the API in part 1.

using Expedition.Auth.Services;

using Microsoft.AspNetCore.Authorization;

using Microsoft.AspNetCore.Mvc;

namespace ExpeditionAuth.MVC.Controllers;

public class AuthController : Controller

{

private readonly ILogger<AuthController> _logger;

private readonly IAuthenticationService _authenticationService;

public AuthController(ILogger<AuthController> logger, IAuthenticationService authenticationService)

{

_logger = logger;

_authenticationService = authenticationService;

}

public class LogInModel

{

public required string Username { get; set; }

public required string Password { get; set; }

}

[HttpPost]

public async Task<IActionResult> LogIn([FromBody] LogInModel model)

{

await _authenticationService.ValidateUserAsync(model.Username, model.Password);

return Ok();

}

[HttpPost]

public async Task<IActionResult> Register([FromBody] LogInModel model)

{

await _authenticationService.RegisterUserAsync(model.Username, model.Password);

return Ok();

}

[HttpGet]

[Authorize]

public IActionResult Test()

{

return Ok();

}

}

The only new concept we're using here is the [Authorize] attribute on the Test method, which tells the dotnet pipeline that this action can only be executed by a signed-in user. In our case that means "if the user passes in a valid session cookie, return a 200 status code". A bit of hidden functionality here is that if the cookie is invalid or does not exist, it should return a 401 (Unauthorized) status code instead.

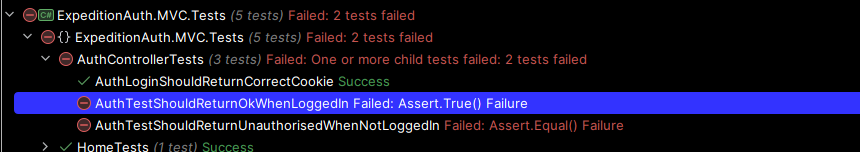

So now we have these methods in place, we can re-run the tests and see how much work there is to do!

Well, that's problematic. I was expecting the Unauthorised case to work out of the box and to only need to implement the success criteria for the [Authorize] attribute, but I guess it's good; we get to learn some new stuff! The best way for me to investigate is probably to debug into the test on the assert line, check the response and, if need be, check the container console to see if an exception has been logged.

The response itself didn't really help, because I'm not serializing the response body in a way that lets me read it easily. I didn't need to, though, because the docker console contains a very nice error that tells me exactly what the issue is:

2024-06-12 18:51:25 fail: Microsoft.AspNetCore.Diagnostics.DeveloperExceptionPageMiddleware[1]

2024-06-12 18:51:25 An unhandled exception has occurred while executing the request.

2024-06-12 18:51:25 System.InvalidOperationException: No authenticationScheme was specified, and there was no DefaultChallengeScheme found. The default schemes can be set using either AddAuthentication(string defaultScheme) or AddAuthentication(Action<AuthenticationOptions> configureOptions).

2024-06-12 18:51:25 at Microsoft.AspNetCore.Authentication.AuthenticationService.ChallengeAsync(HttpContext context, String scheme, AuthenticationProperties properties)

2024-06-12 18:51:25 at Microsoft.AspNetCore.Authorization.Policy.AuthorizationMiddlewareResultHandler.<>c__DisplayClass0_0.<<HandleAsync>g__Handle|0>d.MoveNext()

2024-06-12 18:51:25 --- End of stack trace from previous location ---

2024-06-12 18:51:25 at Microsoft.AspNetCore.Authorization.AuthorizationMiddleware.Invoke(HttpContext context)

2024-06-12 18:51:25 at Microsoft.AspNetCore.Authentication.AuthenticationMiddleware.Invoke(HttpContext context)

2024-06-12 18:51:25 at Microsoft.AspNetCore.Diagnostics.DeveloperExceptionPageMiddlewareImpl.Invoke(HttpContext context)
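The container logs aren't always to hand. For those cases, a small test helper can fold the status code and response body into the assertion message, and with the developer exception page enabled that body carries the exception details. Here is a minimal sketch. DescribeAsync is a hypothetical helper name, and the response is constructed by hand to stand in for the real one.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical test helper: fold the status code and body into one string for assertion messages.
static async Task<string> DescribeAsync(HttpResponseMessage response)
{
    var body = await response.Content.ReadAsStringAsync();
    return $"{(int)response.StatusCode} {response.StatusCode}: {body}";
}

// Stand-in for the failing response; in the real tests this comes from client.GetAsync(...).
using var failing = new HttpResponseMessage(HttpStatusCode.InternalServerError)
{
    Content = new StringContent("No authenticationScheme was specified.")
};

Console.WriteLine(await DescribeAsync(failing));
```

In AuthControllerTests this would slot in as Assert.True(response.IsSuccessStatusCode, await DescribeAsync(response)); since xUnit's Assert.True accepts a user message as its second argument. A failing run then prints the status and body straight into the test output.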

So our next steps are to create an AuthenticationHandler which will be responsible for loading the relevant data from our cookie into an Identity object which dotnet can then utilize for the [Authorize] attribute to ensure a user is authenticated.

using System.Security.Claims;

using System.Text.Encodings.Web;

using System.Text.Json;

using Expedition.Auth.DbModels;

using Microsoft.AspNetCore.Authentication;

using Microsoft.AspNetCore.DataProtection;

using Microsoft.Extensions.Logging;

using Microsoft.Extensions.Options;

namespace Expedition.Auth.Cookies.AuthenticationHandlers;

public class ExpeditionCookieAuthenticationHandler : AuthenticationHandler<AuthenticationSchemeOptions>

{

private readonly IDataProtector _dataProtector;

public ExpeditionCookieAuthenticationHandler(IOptionsMonitor<AuthenticationSchemeOptions> options,

ILoggerFactory logger, UrlEncoder encoder, ISystemClock clock, IDataProtectionProvider dataProtectionProvider)

: base(options, logger, encoder, clock)

{

_dataProtector = dataProtectionProvider.CreateProtector("expedition-authentication");

}

protected override async Task<AuthenticateResult> HandleAuthenticateAsync()

{

var cookie = Request.Cookies["expedition-auth"];

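// No cookie: report "no result" so [Authorize] can issue its challenge (401) without treating it as an error.

if (string.IsNullOrEmpty(cookie)) return AuthenticateResult.NoResult();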

try

{

var unencryptedAuthData = _dataProtector.Unprotect(cookie!);

if (string.IsNullOrEmpty(unencryptedAuthData)) return AuthenticateResult.Fail("Invalid cookie");

var userModel = JsonSerializer.Deserialize<UserAccountModel>(unencryptedAuthData);

if(userModel?.Username == null) return AuthenticateResult.Fail("Invalid cookie");

var claims = new List<Claim>

{

new(ClaimTypes.Name, userModel.Username)

};

var claimsIdentity = new ClaimsIdentity(claims, nameof(ExpeditionCookieAuthenticationHandler));

var claimsPrincipal = new ClaimsPrincipal(claimsIdentity);

var ticket = new AuthenticationTicket(claimsPrincipal, Scheme.Name);

return AuthenticateResult.Success(ticket);

}

catch (Exception e)

{

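// Log through the Logger that AuthenticationHandler provides rather than writing to the console.

Logger.LogWarning(e, "Unable to read the expedition-auth cookie");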

return AuthenticateResult.Fail(e);

}

}

}

So there's quite a bit going on here, but to break it down into steps, we're:

- Reading the data from the cookie and returning AuthenticateResult.NoResult() if it's empty or null

- Decrypting the data that we retrieved from the cookie (the protector must be created with the same purpose string, "expedition-authentication", that CookieAuthenticationService used, otherwise Unprotect throws), deserializing it into a UserAccountModel object and failing the authentication check if the username is null

- Creating a new ClaimsIdentity, which is what dotnet uses behind the scenes as an "identity", and adding a claim holding the user's Username so that we can access that information again without having to check the cookie or the database

- Wrapping that ClaimsIdentity in a ClaimsPrincipal and an AuthenticationTicket, which the framework then uses for all of its background auth work
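The second argument to the ClaimsIdentity constructor matters more than it looks. An identity only reports IsAuthenticated when it has a non-empty authentication type, and the default policy behind a bare [Authorize] rejects a user unless at least one of their identities is authenticated. Here is a BCL-only sketch of that relationship. IsAllowed is a hypothetical approximation of the default requirement written for illustration, not the framework's own code.

```csharp
using System;
using System.Linq;
using System.Security.Claims;

var claims = new[] { new Claim(ClaimTypes.Name, "test") };

// Same shape as the handler: claims plus an authentication type.
var fromHandler = new ClaimsPrincipal(new ClaimsIdentity(claims, "ExpeditionCookieAuthenticationHandler"));

// Same claims but no authentication type: this identity counts as anonymous.
var withoutType = new ClaimsPrincipal(new ClaimsIdentity(claims));

// Hypothetical approximation of the default [Authorize] requirement:
// at least one identity on the principal must be authenticated.
static bool IsAllowed(ClaimsPrincipal user) => user.Identities.Any(identity => identity.IsAuthenticated);

Console.WriteLine($"{fromHandler.Identity?.Name}: authenticated={fromHandler.Identity?.IsAuthenticated}, allowed={IsAllowed(fromHandler)}");
Console.WriteLine($"{withoutType.Identity?.Name}: authenticated={withoutType.Identity?.IsAuthenticated}, allowed={IsAllowed(withoutType)}");
```

Both principals expose the name "test" through the ClaimTypes.Name claim, but only the one built the way the handler builds it would get past [Authorize].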

And now that our AuthenticationHandler is sorted out, we can register it during setup so that the dotnet authentication middleware is able to resolve and use this new handler.

using Expedition.Auth.Cookies.AuthenticationHandlers;

using Expedition.Auth.Cookies.Services;

using Expedition.Auth.DependencyInjection;

using Microsoft.AspNetCore.Authentication;

using Microsoft.Extensions.DependencyInjection;

using Microsoft.Extensions.Options;

using IAuthenticationService = Expedition.Auth.Services.IAuthenticationService;

namespace Expedition.Auth.Cookies.DependencyInjection;

public static class ServiceCollectionExtensions

{

public static IServiceCollection AddExpeditionCookieAuth(this IServiceCollection services, string connectionString)

{

services.AddExpeditionAuth(connectionString);

var serviceDescriptor = services.FirstOrDefault(descriptor => descriptor.ServiceType == typeof(IAuthenticationService));

if (serviceDescriptor != null)

{

services.Remove(serviceDescriptor);

}

services.AddScoped<IAuthenticationService, CookieAuthenticationService>();

services.AddAuthentication(o =>

{

o.DefaultAuthenticateScheme = "ExpeditionAuthCookie";

o.DefaultChallengeScheme = "ExpeditionAuthCookie";

})

.AddScheme<AuthenticationSchemeOptions, ExpeditionCookieAuthenticationHandler>("ExpeditionAuthCookie", null);

return services;

}

}

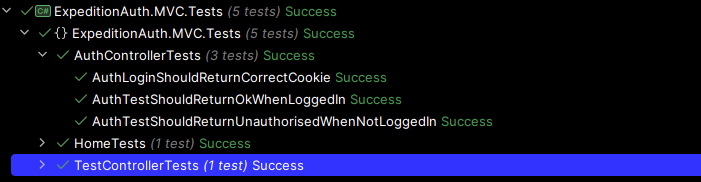

And now that that's in place, we should be able to run the tests and they should all work now.

And we have green across the board which confirms that we've now managed to hook correctly into the [Authorize] attribute and indeed it handles both non-authorised and authorised scenarios.

While this is still a fairly trivial example, in my next post I'll start building out the session management itself. That will store sessions in a database, improve performance with a simple memory cache, and provide functionality to manage and expire sessions when authenticated as the specific user.